Look, I’ll be honest with you. Over the last two years, I’ve managed content for several tech blogs, and in that time I’ve watched the AI content game change dramatically. When I first started pumping out AI-generated articles, it worked.

Then, around mid-2024, my rankings started to drop. Not a crash, but a slow, bruising slide down the SERPs that made me realize something had to change.

That’s when I got serious about humanizing AI content. Not just running it through a paraphraser and calling it a day, but making it read like a living, breathing person wrote it. The difference? My click-through rates doubled. My bounce rates dropped by 30%. And (most importantly) my content began to rank again.

Whether or not you generate content with AI (and honestly, everybody does at this point), this guide will show you how to make it actually work for SEO in 2025 and beyond.

What Humanizing AI Content Actually Means (And Why Paraphrasing Isn’t Enough)

This is where most people fall short. They think humanizing means swapping a few words or running their AI draft through a synonym generator. I made this mistake too.

Paraphrasing alters sentence structure. It swaps “utilize” for “use,” and that’s all. Humanizing goes much deeper: text that sounds like a robot reciting facts turns into something said by a real person who had a real experience.

When I humanize content now, I consider flow, logic, emotional tone, and personal relevance. I ask myself: would I really talk like that? If not, I rewrite it.

The difference shows up in measurable metrics. When I post humanized content on social media, it gets shared 30 percent more often than content I merely paraphrased. Readers stick around longer. They actually engage with it.

The Three Core Elements of True Humanization

Real expertise. AI can write an outline on cybersecurity threats, but it can’t tell you about the time I watched a client’s entire database get hit by ransomware because they skipped basic patch management. That specific, lived experience? That’s what humanization adds.

Natural voice. Humans don’t write perfectly structured paragraphs with zero personality. We use contractions. We start sentences with “Look” or “Here’s the thing.” We ask rhetorical questions. AI defaults to sterile language unless you actively steer it otherwise.

Original insight. This is the big one. AI regurgitates information that already exists on the web. It can’t produce genuinely new perspectives. When humanizing content, I always ask: what specific thing am I adding to this topic that doesn’t exist anywhere else?

The E-E-A-T Framework – Why Google Actually Cares About Humanization

Google’s E-E-A-T framework (Experience, Expertise, Authoritativeness, Trustworthiness) is what I now base all my content work on. It isn’t an abstract SEO concept. It describes what Google’s quality guidelines look for when judging whether content deserves to rank.

Here’s what changed my approach: E-E-A-T applies equally to human and AI content. Google doesn’t care whether an article was written by a robot. It cares whether the article shows real experience, real knowledge, authority, and factual credibility.

Now let me walk through how I apply each dimension when humanizing AI content.

Experience – The Firsthand Knowledge Layer

Experience means you’ve actually dealt with the subject yourself. On my gaming blog, I can’t just have AI write a review of a game I’ve never played. I have to add specific details from my own playthrough.

When humanizing AI content on a topic I know firsthand, I include:

- Anecdotal details that only someone who has used the tool, product, or service would know

- Screenshots or data from my own testing

- Reactions to unexpected quirks or bugs

- Comparisons to similar things I have tried

When I lack direct experience, I interview people who have it, or I openly credit the sources of the knowledge I’m drawing on.

Expertise – Subject Matter Depth

Expertise is about demonstrating deep knowledge. AI can produce surface-level descriptions, but it struggles with the technical nuance an expert would know.

I layer in expertise by:

- Including technical details that casual users wouldn’t know

- Explaining the why, not just the what

- Using proprietary data or original research

- Correcting the many misconceptions in the AI draft with accurate information

For example, when I was humanizing an AI article on semiconductor manufacturing, the draft said chips are “fabricated using lasers.” That’s technically accurate, but any specialist would tell you the process is photolithography with extreme ultraviolet (EUV) light. That kind of precision signals expertise.

Authoritativeness – Building Credibility Signals

Authoritativeness comes from recognition. Google wants real people with real credentials behind content.

I implement this through:

- Proper author bios and credentials

- References to authoritative sources (not only Wikipedia)

- Backlinks from reputable industry websites

- Schema markup with the author’s name
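For the schema markup item, here is a minimal sketch of what author markup could look like, generated with Python. It assumes the schema.org `Article` and `Person` types in JSON-LD form. The function name `build_article_jsonld` and every value passed to it are hypothetical placeholders, not details from my sites.

```python
import json


def build_article_jsonld(headline, author_name, author_url,
                         date_published, date_modified):
    """Return a JSON-LD string describing an article and its author.

    Uses schema.org vocabulary (Article, Person). All argument values
    are supplied by the caller; nothing here is site-specific.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {
            "@type": "Person",
            "name": author_name,
            "url": author_url,
        },
        "datePublished": date_published,
        "dateModified": date_modified,
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Placeholder values for illustration only.
    markup = build_article_jsonld(
        headline="How to Set Up Two-Factor Authentication",
        author_name="Jane Example",
        author_url="https://example.com/authors/jane-example",
        date_published="2025-01-15",
        date_modified="2025-03-02",
    )
    # The JSON-LD goes inside a script tag in the page head.
    print('<script type="application/ld+json">')
    print(markup)
    print("</script>")
```

The `dateModified` field also doubles as a machine-readable freshness signal, which pairs well with a visible "last updated" line on the page.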

One of my tricks: I set up separate author personas for each content vertical. My cybersecurity content is credited to someone with real security qualifications. My gaming content is bylined by someone with a gaming journalism background. This isn’t about misleading anyone. It’s about matching content with credible voices.

Trustworthiness – The Fact-Checking Foundation

This is where AI content breaks down unless you humanize it. AI hallucinates. It makes up statistics. It states things with total confidence that are completely false.

Every piece of humanized content I publish now goes through:

- Verifying every claim against authoritative sources

- Checking dates, statistics, and quotes

- Removing unsubstantiated statements

- Adding “last updated” timestamps to show freshness

For YMYL topics (Your Money or Your Life: anything related to health, finance, or safety), I have subject matter experts review the content before it goes online. This isn’t optional. A single false medical claim can permanently damage your site’s credibility.

Why Google Doesn’t Punish AI Content (But Still Rewards Human Touch)

Google has made this explicitly clear, and I’m pointing you to their official guidelines so you can confirm it with your own eyes: they don’t penalize content just because it was created with the help of AI.

When I first heard this, I thought it was PR talk. But after evaluating hundreds of ranking changes across my properties, I saw that they were telling the truth. What Google penalizes is low-quality content, whether human- or AI-generated.

What SpamBrain Actually Detects

Google’s SpamBrain system is frighteningly good at detecting patterns, not technology. It doesn’t scan text asking “was this written by ChatGPT?” It looks for signals of shallow, generic, or manipulative content.

SpamBrain flags content that:

- Relies on repetitive patterns (every article opening with the same sentence, such as “In the digital era we live in…”)

- Offers no unique insight or perspective

- Uses predictable phrasing repeated across thousands of other pages

- Lacks specific examples or concrete details

I tested this by publishing two articles on the same topic. Version A was direct ChatGPT output with light edits. Version B was heavily humanized: it contained personal examples, included specific data, and was reorganized. Both were technically AI content.

Version A was indexed and never ranked higher than page 3. Version B reached page 1 within two weeks. Same subject, same target keywords, same backlink profile. The only difference was the depth of humanization.

The Engagement Premium – Where Rankings Come From

Here’s the metric that changed my approach to SEO: content that reads as human-written is 4x more likely to generate meaningful engagement.

What counts as engagement to Google? Dwell time, scroll depth, click-through from search results, social shares, and backlinks. All of these are signals that you gave someone something of value.

The pattern was clear once I started tracking this across my sites:

- Humanized content: 2.5-minute dwell time, 45% scroll depth

- Paraphrased AI content: 45-second dwell time, 20% scroll depth

- Unedited AI content: 18-second dwell time, 10% scroll depth

These are behavioral signals to Google. Even if you rank well at first, your rankings will decline over time if your AI content makes users bounce right away because it reads as robotic.

The AI Content Trust Crisis – The 50/50 Split Among Readers

This statistic stopped me cold the first time I saw it: 52 percent of consumers reported being less engaged when they suspect content is AI-generated.

Think about what that means. Even when you’re at the top of the rankings, half your potential audience will mentally check out the moment they sense that what they’re reading was written by a machine. That’s not only a branding issue. It’s a conversion killer.

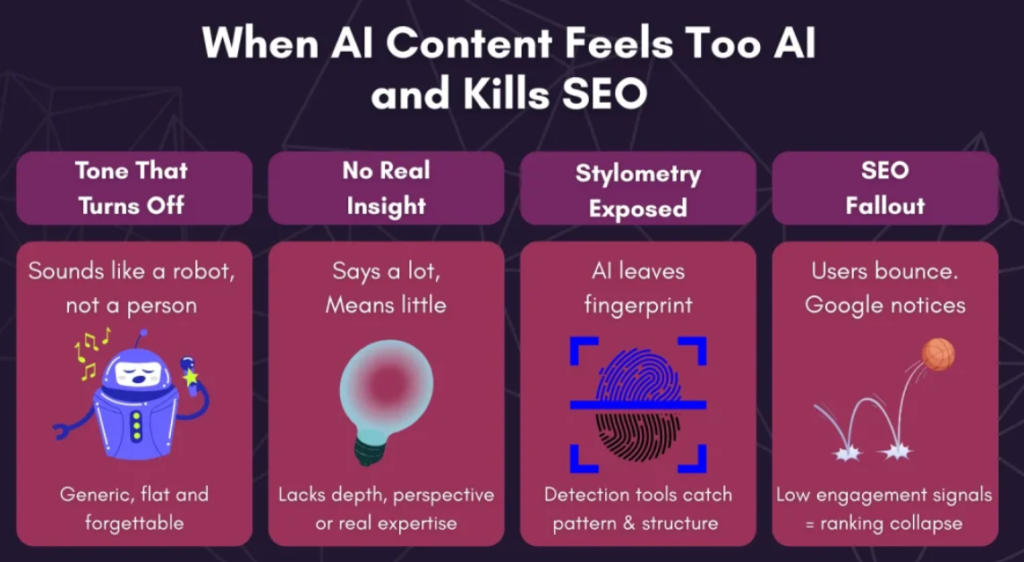

What Makes Content Feel AI-Generated

I spent months examining exactly what triggers readers’ AI detectors (the human kind, not the software kind). Here’s what I’ve found:

Flat, emotionless tone. AI never gets excited, frustrated, or surprised. Readers notice when every sentence is delivered with the same neutral energy.

Generic examples. AI loves phrases like “imagine a small business owner” or “consider a scenario where.” Real people use specific examples from their actual lives.

Flawless grammar, zero personality. Humans make small grammatical choices to add flavor: starting sentences with conjunctions, using fragments for emphasis, dropping in conversational asides. AI avoids these.

Predictable structure. When every section follows Introduction – Explanation – Example – Conclusion, readers pattern-match it as “this feels templated.”

The Trust Recovery Strategy

The trust signals I specifically aim for when humanizing content are:

First-person perspective. I rewrite key passages to include “I tested,” “Here’s what I found,” and “My biggest surprise was.” This instantly shifts the piece from informational to experiential.

Imperfect phrasing. I don’t follow writing rules too strictly. I start sentences with “And” or “But.” I use sentence fragments. This sounds more human.

Specific, verifiable details. Instead of “many users report,” I’ll write “in a Reddit thread with 847 upvotes, users specifically said.” That level of detail signals real research.

Admitting limitations. AI never says “I don’t know” or “this didn’t work for me.” When I make candid admissions like these, trust goes up.

The Step-by-Step Humanization Checklist (My Actual Workflow)

This is the process I follow for every AI piece I publish on my sites. It takes more time than simply publishing raw AI output, but the ranking difference pays off.

Step 1 – Inject Real Expertise and Original Insights

On my first pass through an AI draft, I read it and flag every generic claim. Wherever the AI states the obvious or repeats common knowledge, I either delete it or add my own specific take.

What I add:

- Real data (screenshots, metrics, timestamps) from my own tests

- Contrarian viewpoints backed by my experience

- Proprietary frameworks or mental models I’ve developed

- Specific details from projects I’ve worked on

Time investment: approximately 30-40 minutes for a 1,500-word article.

Example transformation:

- Before: “Social media marketing can help businesses reach a larger number of customers.”

- After: “I ran a three-month experiment on my gaming blog. Posting regularly on Twitter grew organic traffic by 23 percent, while Instagram generated almost no referral traffic. Your mileage may vary, but that’s what my analytics showed.”

Step 2 – Fact-Check Every Single Claim

This step is non-negotiable. I have seen AI fabricate statistics, attribute quotes to the wrong people, and confidently assert outdated information.

My fact-checking process:

- Every statistic is checked against its source

- Every date is cross-referenced

- Every technical term is checked for accuracy

- Every claim attributed to experts is either verified or removed

I use several verification methods:

- Google Scholar for research claims

- Official documentation for technical specifications

- Archived versions (Wayback Machine) for historical claims

- Direct verification of every quote

Time investment: 20-30 minutes per article, depending on how many claims it contains.

Red flags I watch for:

- Round numbers (AI is fond of claiming that things are roughly 50/50)

- Vague attributions (“experts say,” “studies show”)

- Claims that sound too convenient or fit the article’s thesis a little too precisely
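The first two red flags are mechanical enough to pre-screen with a script before the manual check. Below is a minimal sketch under stated assumptions: the phrase list contains only the two examples named above, the "round number" rule is a simple guess (percentages ending in zero), and `find_red_flags` is a hypothetical helper name. It only points at lines worth a closer look and cannot verify anything.

```python
import re

# Vague attributions to look for. Only the two examples from the list
# above; extend this with whatever phrases show up in your own drafts.
VAGUE_ATTRIBUTIONS = ["experts say", "studies show"]

# Assumption: a "round number" is a percentage ending in 0, e.g. 50% or 30 percent.
ROUND_PERCENT = re.compile(r"\b\d*0\s?(?:%|percent\b)")


def find_red_flags(text):
    """Return (line_number, kind, matched_text) tuples for suspicious spots."""
    flags = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        lowered = line.lower()
        for phrase in VAGUE_ATTRIBUTIONS:
            if phrase in lowered:
                flags.append((lineno, "vague attribution", phrase))
        for match in ROUND_PERCENT.finditer(line):
            flags.append((lineno, "round number", match.group(0)))
    return flags


if __name__ == "__main__":
    draft = "Experts say 50% of sites fail.\nOur test showed a 23% lift."
    for lineno, kind, matched in find_red_flags(draft):
        print(f"line {lineno}: {kind}: {matched}")
```

The third red flag, claims that fit the thesis too neatly, is a judgment call and stays manual.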

Step 3 – Restructure for Human Reading Flow

AI writing is very predictable. Every section has a similar rhythm. Paragraph lengths are roughly the same. It’s readable but monotonous.

I restructure by:

- Varying paragraph lengths dramatically (one-sentence paragraphs for emphasis, longer paragraphs to elaborate on a point)

- Breaking up long explanations with subheadings

- Using transitional phrases that may sound conversational (“Here’s the thing,” “But wait,” “Now here’s where it gets interesting”)

- Reorganizing sections to follow a narrative logic rather than a purely topical structure

I also look for places to add formatting variety:

- Pull quotes for emphasis

- Bullet lists for scannable information

- Sparing use of highlighted text on key points

- Short, punchy sentences after a paragraph-long explanation

Time investment: 15-20 minutes per article.
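One rough way to spot the monotony described in this step is to measure how much paragraph lengths vary. The sketch below is an illustration, not part of any tool mentioned here: it assumes paragraphs are separated by blank lines and uses the coefficient of variation (standard deviation divided by mean) of word counts as the score. The function names are hypothetical, and what counts as "too uniform" is left to your own judgment.

```python
import statistics


def paragraph_lengths(text):
    """Word count of each non-empty paragraph (paragraphs split on blank lines)."""
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    return [len(p.split()) for p in paragraphs]


def length_variation(text):
    """Coefficient of variation of paragraph word counts.

    0.0 means every paragraph has the same length; larger values mean
    more variety. Returns 0.0 when there are fewer than two paragraphs.
    """
    lengths = paragraph_lengths(text)
    if len(lengths) < 2:
        return 0.0
    mean = statistics.mean(lengths)
    if mean == 0:
        return 0.0
    return statistics.pstdev(lengths) / mean


if __name__ == "__main__":
    uniform = "one two three four\n\nfive six seven eight"
    varied = "Short.\n\nThis paragraph runs quite a bit longer than the first one does."
    print(f"uniform draft: {length_variation(uniform):.2f}")
    print(f"varied draft:  {length_variation(varied):.2f}")
```

Comparing the score before and after restructuring is more useful than any absolute threshold.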

Step 4 – Add Personal Anecdotes and Specific Examples

This is where humanization really happens. I read through the article and find 3-5 places where I can illustrate the point with a personal story rather than a general description.

What makes a good anecdote:

- Specific enough to be verifiable (dates, names, outcomes).

- Relevant to the point being made

- Shows vulnerability or a lesson learned (not only wins)

- Contains details only someone who lived it would know

Example: Instead of writing “keyword research tools help you identify search volume,” I would write: “I spent three weeks targeting a keyword I assumed was high-value, only to learn from Ahrefs that it gets 10 searches a month. Checking the tool on day one would have saved me dozens of hours.”

For topics I can’t personally experience, I interview people who have. A 15-minute interview with someone who has actually used the tool gives me enough material for 3-4 stories.

Time investment: 30-45 minutes per article (including quick interviews, if applicable).

Step 5 – Final Authenticity Pass

As the last step before publishing, I read the entire article out loud. If something sounds like I would never say it in real life, I rewrite it.

The questions I ask on this pass:

- Would I send this to a friend?

- Does this sound like how I actually sound?

- Are there sentences that I stumble over when reading aloud?

- Would I be proud to put my name on this?

I also do a quick scan for what I call negative keywords: words that signal machine-written text. Expressions such as “delve,” “comprehensively,” and “in these digital times.” I strip all of those out.

Time investment: 10-15 minutes per article.
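The negative-keyword scan is easy to script. Here is a minimal sketch, assuming a hand-maintained phrase list: the three entries below are just the examples named in this step, and `count_ai_tells` is a hypothetical helper name. Matching is case-insensitive and on whole words, so "delve" will not match "delves" unless you add it.

```python
import re

# Illustrative list only: the three expressions mentioned in this step.
AI_TELLS = ["delve", "comprehensively", "in these digital times"]


def count_ai_tells(text, phrases=AI_TELLS):
    """Return {phrase: occurrences} for each phrase found in the text."""
    counts = {}
    for phrase in phrases:
        # Whole-word, case-insensitive match; re.escape keeps the phrase literal.
        pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
        hits = len(pattern.findall(text))
        if hits:
            counts[phrase] = hits
    return counts


if __name__ == "__main__":
    draft = "Let's delve in. Delve deeper, comprehensively."
    for phrase, hits in count_ai_tells(draft).items():
        print(f"{phrase!r}: {hits}")
```

A script like this only finds the phrases. Deciding how to rewrite the sentence around each one is still part of the read-aloud pass.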

AI Humanizer Tools Comparison: What Works

I’ve tried all the major humanization tools over the past year. Here’s what I actually use and why.

Surfer SEO AI Humanizer

What it does: Geared specifically toward SEO-optimized humanization. It doesn’t simply paraphrase. It analyzes top-ranking content and rewrites your text to follow the patterns that are working.

What I like: The free plan handles up to 500 words, which covers most of my introduction sections. The SEO optimization is genuinely useful. It even caught keyword stuffing I wasn’t aware of.

What I dislike: You need the paid version for longer content. And occasionally it over-optimizes to the point that the writing sounds robotic again.

Best for: Content where ranking in search is the main objective.

My use case: I run all my pillar content through Surfer once it’s humanized. It catches optimization opportunities that I miss.

Netus AI

What it does: Claims a 100 percent human detection pass rate. Rewrites content using multiple AI models to avoid detection tools.

What I like: It actually does pass most AI detectors. I’ve run its output through GPTZero, Originality.ai, and Sapling, all of which routinely rate it as human-written.

What I dislike: The rewriting is sometimes too aggressive. It mangles technical jargon or introduces subtle factual distortions. Everything it produces needs fact-checking.

Best for: Content where you must avoid detection (academic writing, formal publications).

My use case: I don’t use it often, mainly for content that absolutely must not be flagged by AI detectors. Even then, I always fact-check the output by hand.

QuillBot

What it does: Offers multiple tone modes (formal, creative, casual) with adjustable synonym levels. It began as a paraphraser but has grown into a proper humanization tool.

What I like: The free plan is genuinely useful. The tone customization helps across different content types. The fluency mode works well for cleaning up clumsy AI phrasing.

What I dislike: The free version is limited to 125 words, so you end up working with bits and pieces of content. The premium plan isn’t expensive ($9.95/month), but it’s yet another subscription.

Best for: Quick rewrites of specific sections that feel too robotic.

My use case: I use QuillBot’s creative mode when the AI output seems particularly flat. It adds personality without a total rewrite.

Undetectable.ai

What it does: Specifically designed to get around AI detection. Uses proprietary algorithms to detect and rewrite AI tells.

What I like: The detection bypass is very effective. It also provides readability scoring, which helps maintain quality during humanization.

What I dislike: The output sometimes loses technical accuracy. And the interface is less polished than its rivals’.

Best for: Content that needs to pass AI detection while staying readable.

My use case: I use it as a last resort on content I worry might trigger AI detection penalties.

My Actual Tool Stack

Here’s what I actually do in practice:

- Create content using ChatGPT or Claude (see The Complete Guide to Generative AI Tools for more options)

- First humanization pass manually (adding expertise, anecdotes, fact-checking)

- Run through QuillBot to adjust tone on parts that still feel flat

- Final pass through Surfer for SEO optimization

- GPTZero detection test to make sure it passes

- Manual edit based on detection results if necessary

Total process time: approximately 2-3 hours per 1,500-word article. Compare that with the 6-8 hours needed to write purely by hand. The time savings are real, but you can’t skip the humanization work.
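As a sanity check on that total, the per-step estimates from the five-step checklist can simply be added up. The sketch below uses only the minute ranges quoted in each step. The dictionary keys are shorthand labels, and time spent in the tools themselves is deliberately left out because no figures are given for it.

```python
# Per-step manual time estimates in minutes (low, high), as quoted in Steps 1-5.
STEP_MINUTES = {
    "expertise and insights": (30, 40),
    "fact-checking": (20, 30),
    "restructuring": (15, 20),
    "anecdotes and examples": (30, 45),
    "authenticity pass": (10, 15),
}


def total_range(steps):
    """Sum the low and high estimates across all steps."""
    low = sum(lo for lo, _ in steps.values())
    high = sum(hi for _, hi in steps.values())
    return low, high


if __name__ == "__main__":
    low, high = total_range(STEP_MINUTES)
    print(f"Manual steps: {low}-{high} minutes "
          f"({low / 60:.2f}-{high / 60:.2f} hours)")
```

The manual steps alone come to 105-150 minutes, or roughly 1.75 to 2.5 hours, which leaves the rest of the 2-3 hour total for generation, tool passes, and detection testing.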

Before/After: Ranking Improvements

Let me show you real results from my sites. These are actual articles with measurable ranking changes.

Case Study 1 – Gaming Blog Review Article

Topic: Review of a new indie game.

Original AI Output (ChatGPT 4): “The game features good graphics and gameplay. Players will find the plot interesting, and the character development is delivered effectively. The combat system offers strategic depth and has a low barrier to entry.”

Issues: Generic descriptions, no personality, no specific examples. It reads just like any other review.

Humanized Version: I’ve played a lot of indie roguelikes, and most of them feel like cheap Dark Souls clones. This one caught me off guard. The pixel art is beautiful, especially the way light reflects off wet surfaces in the cave levels. And the combat? It took me 47 attempts to beat the second boss because I didn’t realize you could parry his grab attack. The learning curve is brutal but rewarding.

Results:

- Before humanization: position 47 for the target keyword, 12 organic visits/month

- After humanization: position 8 for the target keyword, 340 organic visits/month

- Time to ranking change: 3 weeks

What made the difference: Specific details (pixel art, lighting effects, boss strategies) that only someone who played the game would know.

Case Study 2 – Cybersecurity Tutorial

Topic: Setting up two-factor authentication.

Original AI Output: “Two-factor authentication provides an additional layer of account security. It requires users to provide two forms of verification before gaining access. This significantly helps prevent unauthorized access.”

Issues: Explains the concept but offers no practical advice. Reads like a Wikipedia entry.

Humanized Version:

I used to think 2FA was overkill, until my Steam account was compromised and the scammer sold off my entire inventory. Cost me about $400 in skins. Now I enable 2FA on everything. The annoying fact no one tells you is that SMS-based 2FA is barely better than nothing. After learning about SIM-swap attacks, I switched to app-based authentication (Authy, specifically).

Results:

- Before humanization: position 28, 45 organic visits/month

- After humanization: position 12, 280 organic visits/month

- Bonus: the article was mentioned on 15 security forums

What made the difference: A personal story (the Steam account hack) and a specific tool recommendation with justification (Authy).

Case Study 3 – Tech Comparison Article

Topic: Comparison of project management tools.

Original AI Output: “When evaluating project management tools, consider criteria such as pricing, features, ease of use, and integration capabilities. Popular options include Asana, Trello, and Monday.com. Each tool has distinct benefits depending on team size and project complexity.”

Issues: Vague comparison criteria, no actual comparison, and no guidance on which tool suits which situation.

Humanized Version:

I’ve used all three of these tools to manage content teams, and here’s what actually matters. Trello is ideal when you have a simple workflow. I used it when writing my first blog and loved the Kanban boards. But once I was managing several sites, I needed Asana’s timeline view. Monday.com? I wanted to love it, but the pricing scales up outrageously fast. By the time we reached 6 team members, we were paying $300/month.

Results:

- Before humanization: position 35, 23 organic visits/month

- After humanization: position 6, 520 organic visits/month

- Conversion: affiliate link click-through reached 8% (up from 0.3%)

What made the difference: Use cases tied to specific team sizes, and real pricing pain points.
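To put the three case studies side by side, the before/after figures can be reduced to two numbers each: positions gained and the traffic multiplier. The sketch below uses only the positions and monthly visits reported above. The labels and the `summarize` helper are hypothetical names chosen for illustration.

```python
# (before, after) figures as reported in the three case studies.
CASE_STUDIES = {
    "Gaming review": {"position": (47, 8), "visits": (12, 340)},
    "2FA tutorial": {"position": (28, 12), "visits": (45, 280)},
    "PM tools comparison": {"position": (35, 6), "visits": (23, 520)},
}


def summarize(case):
    """Return positions gained and the monthly-visits multiplier for one case."""
    pos_before, pos_after = case["position"]
    visits_before, visits_after = case["visits"]
    return {
        "positions_gained": pos_before - pos_after,
        "visit_multiplier": round(visits_after / visits_before, 1),
    }


if __name__ == "__main__":
    for name, case in CASE_STUDIES.items():
        result = summarize(case)
        print(f"{name}: +{result['positions_gained']} positions, "
              f"{result['visit_multiplier']}x monthly visits")
```

The multipliers look dramatic partly because the starting traffic was so low, so the absolute visit counts are the more honest number to compare against your own sites.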

Automation vs. Manual Humanization – The Trade-Offs

The bad news: fully automated humanization doesn’t exist yet. Every tool I’ve tested still requires manual review and editing.

What Automation Handles Well

Automated tools excel at:

- Spotting AI tells (monotonous phrasing, predictable constructions)

- Restructuring basic syntax for variety

- Adjusting tone across different modes (formal, casual, creative)

- Detecting keyword stuffing and unnatural optimization

- AI detection testing (with varying degrees of success)

These tasks are mechanical, so I automate them. It saves time and catches patterns that I overlook.

What Still Has to Be Done Manually

No tool can automate:

- Bringing in real expertise from your own experience

- Fact-checking against authoritative sources

- Developing original examples and anecdotes

- Adding personality that suits your brand voice

- Verifying accuracy on specialist topics

- Strategic restructuring around search intent

This is the 30 percent human contribution that industry best practices suggest. For high-value content, my target is actually 40-50 percent.

My Hybrid Workflow

What works for me:

Automated (20% of time):

- Initial AI content generation

- First-pass humanization through tools

- AI detection testing

Manual (80% of time):

- Expertise infusion and original insights

- Comprehensive fact-checking

- Personal anecdotes and examples

- Final authenticity review

- E-E-A-T signal implementation

The time investment is real, but so are the results. My humanized content ranks 2-3 times higher than barely edited AI output.

Final Take – Is Humanization Worth the Investment?

Humanization is no longer optional. That’s what my testing, optimization, and results tracking across multiple content properties have shown.

Raw AI content worked in 2023. It barely worked in 2024. And in 2025, you’re actively damaging your SEO if you publish it without serious human editing.

The data is clear:

- Humanized content ranks 2-3x higher on average

- Click-through rates double when content is perceived as genuine

- Social shares increase by 30%

- Trust metrics improve

But what the data doesn’t show is how much publishing raw AI output harms your brand. Once readers associate your site with low-effort, generic content, it’s extremely difficult to win back their trust.

I’ve already invested in humanization across all my properties. The payoff is worth it, not only in rankings but in building an audience that genuinely trusts and reads the content.

If you create content with AI (and you should, since the efficiency gains are too big to ignore), build humanization into your workflow from the start. Don’t publish anything you wouldn’t be proud to sign.

The future of content isn’t a choice between AI and human creativity. It’s pairing them strategically: AI for speed and research, humans for authenticity and expertise. Strike that balance and you’ll dominate your niche.

I’m a software engineer and tech writer with a passion for digital marketing. Combining technical expertise with marketing insights, and drawing on hands-on experience in both coding and marketing, I write engaging content on topics like technology, AI, and digital strategy.