I'll be honest: I did not wake up one morning obsessed with edge computing processors. It started when I was trying to understand why a factory client's video surveillance system slowed down during peak hours. It turned out the processor could not handle real-time analytics without overheating.

That kick-started my journey into the world of Intel Atom chips, ARM processors, and thermal nightmares.

If you are building industrial IoT gateways, deploying autonomous robots, or just curious about what goes on inside edge devices, here is what I learned while researching edge computing processors: Intel Atom, Intel Xeon 6, and ARM-based designs. No fluff, just the stuff that counts.

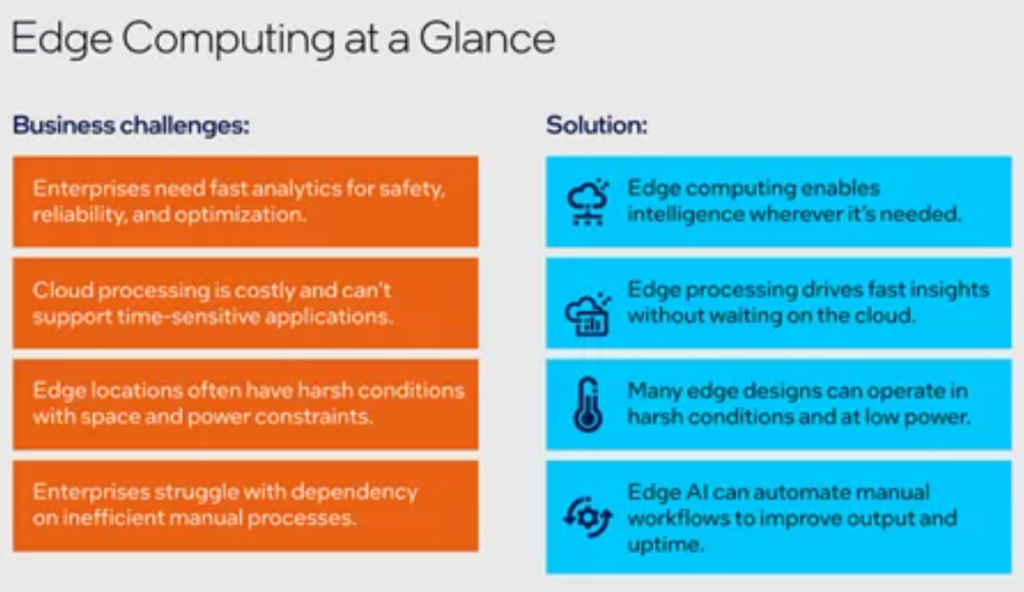

What Makes Edge Computing Processors Different?

Here is what most people overlook: edge processors are not simply smaller cloud servers. They process data right where it is produced, on factory floors, in surveillance cameras, and inside autonomous robots. That means they have to be power-efficient and thermally stable. They also have to make decisions in real time instead of waiting for a reply from the cloud.

The simplest way I tested this idea was to run a basic real-time object detection workload on a regular CPU and on an edge-optimized processor. The difference? The cloud round trip took 200+ milliseconds. Edge processing? Under 50ms. For robots, that latency gap can decide whether a collision is avoided or whether a defective part is caught on the assembly line.
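
To make that latency gap concrete, here is a back-of-the-envelope sketch of how far a moving object travels while a decision is still in flight. The 1.5 m/s speed is a hypothetical value chosen for illustration and was not measured in my test. Plug in your own robot or conveyor speed.

```python
# Back-of-the-envelope: how far does something move while we wait for a decision?
# The 1.5 m/s speed is a hypothetical example value, not a measured figure.


def distance_during_latency_mm(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance in millimeters travelled during `latency_ms` at `speed_m_per_s`."""
    # (m/s) * (ms) = 1e-3 m = mm, so the units work out directly.
    return speed_m_per_s * latency_ms


if __name__ == "__main__":
    for label, latency_ms in [("cloud round trip", 200.0), ("edge processing", 50.0)]:
        travel = distance_during_latency_mm(1.5, latency_ms)
        print(f"{label}: {travel:.0f} mm of travel at 1.5 m/s")
```

At that assumed speed, the object moves 300 mm during a 200 ms cloud round trip but only 75 mm at 50 ms.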

The Three Main Players

When choosing an edge computing processor, you are generally weighing three architectures:

Intel Atom x6000E: Fanless and low-power (2-15W typical), designed for industrial gateways and compact devices. I have watched these run 24-hour shifts in dusty manufacturing areas without active cooling.

Intel Xeon 6: The powerhouse. It offers up to 144 cores and is optimized for high-throughput edge servers (5G base stations, video transcoding, network firewalls). Power draw starts at about 50W, but you get serious processing muscle in return.

ARM-based solutions (Cortex-A320 CPUs, Ethos-U85 NPUs): Energy-efficient RISC architecture. They typically use 20-30 percent less power than x86 equivalents on similar workloads. Ideal for battery-powered sensors, wearables, and other ultra-constrained devices.

Industrial IoT: Where Edge Processors Actually Live

Most discussion of edge computing stays abstract. I have worked on real use cases, and I would like to walk you through them.

Video Surveillance Systems

I worked with a warehouse facility running 50+ cameras. The streams were eating up cloud bandwidth, and alerts were slow to arrive. We moved each camera cluster onto Intel Atom x6000E processors paired with Movidius VPUs (vision processing units).

The result? Local object detection, facial recognition, and anomaly flagging all ran on the device, and only critical alerts were sent to the cloud. Bandwidth load dropped by 70 percent. Response time to security incidents fell from 5-10 seconds to under 2 seconds.

It worked because Movidius VPUs are built specifically for vision workloads. They run video inference efficiently without draining the power budget.
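
The pattern behind that result is simple: run inference locally, then forward only what matters. Below is a minimal sketch of the forwarding side. It assumes detections arrive as labeled, scored events. The `Detection` type, the label set, and the 0.8 confidence threshold are all hypothetical. The sketch also does not model the VPU inference itself. Counting events is only a rough proxy for bandwidth, because frames and metadata differ in size.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Detection:
    """One inference result produced on the edge device (hypothetical schema)."""
    camera_id: str
    label: str
    confidence: float


# Hypothetical policy: which classes count as "critical" is site-specific.
CRITICAL_LABELS = {"intruder", "fire", "forklift_collision"}


def should_forward(det: Detection, min_confidence: float = 0.8) -> bool:
    """Forward only confident detections of critical classes to the cloud."""
    return det.label in CRITICAL_LABELS and det.confidence >= min_confidence


def filter_for_cloud(detections: list[Detection]) -> list[Detection]:
    """Keep everything local except the events worth sending upstream."""
    return [d for d in detections if should_forward(d)]


def event_reduction(total: int, forwarded: int) -> float:
    """Fraction of events that never leave the device (a rough bandwidth proxy)."""
    return 0.0 if total == 0 else 1.0 - forwarded / total


if __name__ == "__main__":
    batch = [
        Detection("cam-01", "person", 0.95),
        Detection("cam-01", "intruder", 0.91),
        Detection("cam-02", "fire", 0.55),    # below threshold: stays local
        Detection("cam-03", "pallet", 0.99),  # not critical: stays local
    ]
    sent = filter_for_cloud(batch)
    print([d.label for d in sent], event_reduction(len(batch), len(sent)))
```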

Autonomous Robots in Manufacturing

This is where thermal management becomes a problem. Factory robots work in hot or cold environments with machinery heat, dust, and humidity. Active cooling (fans) does not last long under those conditions.

The solution I have seen work: Intel Core i5-1350P processors (Raptor Lake-P architecture) in passively cooled, fanless housings. These chips balance performance and thermal efficiency. They deliver enough processing power for simultaneous sensor fusion, path planning, and obstacle avoidance within a 15-28W power budget.

One robotics deployment I researched ran for 18 months without thermal throttling. The secret? It used industrial-rated processors (0°C to 60°C) paired with heat sink configurations designed to dissipate heat efficiently.
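
Verifying a claim like "no thermal throttling in 18 months" on your own hardware requires temperature logs. On Linux, the kernel exposes thermal sensors under `/sys/class/thermal/thermal_zone*/`. The `temp` file reports millidegrees Celsius, and the `type` file names the sensor. The sketch below reads those files. The 85°C alert threshold is a hypothetical placeholder, so take the real limit from your processor's datasheet. Zone names and counts vary by platform.

```python
from __future__ import annotations

from pathlib import Path


def read_thermal_zones(root: str = "/sys/class/thermal") -> dict[str, float]:
    """Return {"thermal_zoneN:type": temperature_in_C} for each readable zone."""
    readings: dict[str, float] = {}
    for zone in sorted(Path(root).glob("thermal_zone*")):
        try:
            kind = (zone / "type").read_text().strip()
            millideg = int((zone / "temp").read_text().strip())
        except (OSError, ValueError):
            continue  # zone vanished, is unreadable, or reported a non-integer
        readings[f"{zone.name}:{kind}"] = millideg / 1000.0
    return readings


def zones_over(readings: dict[str, float], limit_c: float = 85.0) -> list[str]:
    """Names of zones at or above `limit_c` (85 C is a placeholder, not a spec)."""
    return [name for name, temp in readings.items() if temp >= limit_c]


if __name__ == "__main__":
    now = read_thermal_zones()  # empty dict on systems without sysfs thermal zones
    print(now)
    print("hot zones:", zones_over(now))
```

To log temperatures over time, call `read_thermal_zones()` on a timer and store the results. Throttling usually appears as temperatures pinned near the limit while throughput drops.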

Industrial IoT Gateways

Gateway devices collect data from hundreds of sensors (temperature, vibration, pressure, and so on). They need local processing to compress that data into summaries before sending it to the cloud. Here is what I learned about choosing the right processor:

- Light loads (basic filtering, aggregation): Intel Atom x6000E easily handles 100+ sensor streams at 6-10W.

- Medium loads (local ML inference, protocol translation): Intel Core i5 variants with 15-28W power budgets.

- Heavy workloads (video analytics): Intel Xeon 6 is required, but expect 50W+ and active cooling.

The catch? Fan-cooled designs are unpopular in most industrial settings. In other words, you are often trading processing capacity against heat.
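
The three load tiers above, combined with the fanless constraint, reduce to a small lookup table. Here is a minimal sketch that assumes those rules of thumb hold for your workload. The `Load` tiers and the `pick_processor` helper are hypothetical. Treating the Core i5 tier as fanless-capable assumes a well-engineered passive thermal solution like the one in the robotics example.

```python
from __future__ import annotations

from enum import Enum


class Load(Enum):
    LIGHT = "basic filtering, aggregation"
    MEDIUM = "local ML inference, protocol translation"
    HEAVY = "video analytics"


# (processor family, (min W, max W or None), can run fanless?)
# Figures mirror the rules of thumb above; validate them on your own hardware.
TIERS = {
    Load.LIGHT: ("Intel Atom x6000E", (6, 10), True),
    Load.MEDIUM: ("Intel Core i5", (15, 28), True),   # fanless only with careful thermal design
    Load.HEAVY: ("Intel Xeon 6", (50, None), False),  # needs active cooling
}


def pick_processor(load: Load, fanless_required: bool) -> str | None:
    """Suggest a processor family, or None if the constraints conflict."""
    family, (low_w, high_w), fanless_ok = TIERS[load]
    if fanless_required and not fanless_ok:
        return None  # capacity vs. heat: relax the cooling rule or split the workload
    power = f"{low_w}-{high_w}W" if high_w is not None else f"{low_w}W+"
    return f"{family} ({power})"


if __name__ == "__main__":
    for load in Load:
        print(load.name, pick_processor(load, fanless_required=True))
```

Returning `None` makes the capacity-versus-heat conflict explicit. A fanless video-analytics box has no valid answer in this table.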

Power Budgets: The Real Constraint Nobody Talks About

Every edge deployment starts with one question: what is your power budget?

I have seen projects fail because teams chose powerful processors and neglected power delivery.

Battery-powered field sensors? You are working with 100-500 milliwatts. Wall-powered industrial controllers? 15-50W is typical. At 5G base stations, edge servers drawing 50-300W become acceptable.
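
For battery-powered sensors, the power budget translates directly into runtime: capacity divided by average power. Duty cycling (sleeping between readings) is what stretches that runtime. Here is a minimal sketch. The 10 Wh capacity, 500 mW active draw, 10 mW sleep draw, and 10% duty cycle are hypothetical example values. The model ignores self-discharge, temperature derating, and regulator losses, so real runtimes will be shorter.

```python
def average_power_w(active_w: float, sleep_w: float, duty_cycle: float) -> float:
    """Average draw when the device is active for `duty_cycle` (0..1) of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return active_w * duty_cycle + sleep_w * (1.0 - duty_cycle)


def runtime_hours(capacity_wh: float, avg_power_w: float) -> float:
    """Idealized runtime: capacity / average power (no derating or losses)."""
    if avg_power_w <= 0:
        raise ValueError("average power must be positive")
    return capacity_wh / avg_power_w


if __name__ == "__main__":
    battery_wh = 10.0  # hypothetical pack
    always_on = runtime_hours(battery_wh, 0.5)  # 500 mW, never sleeps
    duty_cycled = runtime_hours(battery_wh, average_power_w(0.5, 0.01, 0.1))
    print(f"always on: {always_on:.0f} h, 10% duty cycle: {duty_cycled:.0f} h")
```

With these made-up numbers, an always-on sensor at the top of the 100-500 mW range lasts about 20 hours. The same sensor at a 10% duty cycle lasts about 170 hours.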

Fanless vs. Active Cooling

Factory IoT deployments mostly use fanless designs for an obvious reason: fans accumulate dust, wear out, and introduce mechanical failure points. The trade-off is that fanless designs are thermally limited.

In practice, the Intel Atom x6000E peaks at approximately 15W and can run fanless indefinitely. Push an Intel Core i5 to 28W, and you need efficient thermal design: heat sinks, thermal pads, perhaps even heat pipes. Intel Xeon 6? Forget fanless unless you are using exotic liquid cooling, which I have only seen in high-value 5G deployments.

ARM processors shine here. The Cortex-A320 delivers competitive performance at much lower power consumption. For battery-powered applications such as wireless sensors, wearables, or mobile gateways, ARM's energy efficiency is hard to beat.
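
A quick way to reason about fanless limits is the standard steady-state estimate T_j = T_a + P × θ_JA. In words, junction temperature equals ambient temperature plus power multiplied by the junction-to-ambient thermal resistance of the whole cooling path. The sketch below applies that estimate. Every θ_JA value and the 100°C junction limit are hypothetical placeholders, so take real numbers from the processor datasheet and your heat sink's specifications. The estimate also ignores transient behavior and the chip's built-in throttling logic.

```python
def junction_temp_c(ambient_c: float, power_w: float, theta_ja_c_per_w: float) -> float:
    """Steady-state estimate: T_j = T_a + P * theta_JA."""
    return ambient_c + power_w * theta_ja_c_per_w


def max_sustained_power_w(ambient_c: float, theta_ja_c_per_w: float, tj_max_c: float) -> float:
    """Largest power the cooling path can hold without exceeding tj_max_c."""
    return (tj_max_c - ambient_c) / theta_ja_c_per_w


if __name__ == "__main__":
    AMBIENT_C = 50.0  # hot factory floor (hypothetical)
    TJ_MAX_C = 100.0  # placeholder; use your datasheet value
    # (label, power in W, theta_JA in C/W); all values hypothetical
    cases = [
        ("Atom-class chip, modest passive sink", 15.0, 3.0),
        ("Core i5 at 28W, same sink", 28.0, 3.0),
        ("Core i5 at 28W, heat pipes + large sink", 28.0, 1.5),
    ]
    for label, power, theta in cases:
        tj = junction_temp_c(AMBIENT_C, power, theta)
        verdict = "OK" if tj <= TJ_MAX_C else "will throttle"
        print(f"{label}: Tj ~ {tj:.0f} C -> {verdict}")
```

With these made-up numbers, a heat sink that keeps a 15W part at about 95°C pushes a 28W part to about 134°C. Halving θ_JA with heat pipes and a larger sink brings the 28W part back to about 92°C.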

Real-Time Processing: Where FPGAs Come In

When you need truly deterministic latency (think microsecond-scale consistency), conventional processors hit their limits. That is where FPGAs (Field-Programmable Gate Arrays) come in.

One manufacturing line I consulted on required sub-millisecond quality-control checks. Software running on CPUs had variable latency, ranging from 2ms to as much as 15ms. FPGAs gave us stable 500-microsecond response times because their logic runs directly in programmable hardware instead of as instructions executed on a processor.

The trade-off? FPGAs demand specialized development skills and are far less flexible than general-purpose processors. For critical control loops, however, they are hard to replace.
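
When comparing a CPU path against an FPGA path, averages hide the problem. What matters is the worst case and how often you miss the deadline. Here is a minimal sketch of that analysis using the nearest-rank percentile method. The sample data is synthetic and only loosely based on the figures above (2-15ms on CPUs, a steady 500 microseconds on the FPGA). The 1ms deadline stands in for the sub-millisecond requirement.

```python
from __future__ import annotations


def percentile_nearest_rank(sorted_samples: list[float], p: int) -> float:
    """Nearest-rank percentile for integer p in 1..100 on pre-sorted data."""
    rank = (p * len(sorted_samples) + 99) // 100  # ceil(p * n / 100) using integers
    return sorted_samples[max(rank, 1) - 1]


def latency_report(samples_us: list[float], deadline_us: float) -> dict:
    """Summarize latency samples (microseconds) against a hard deadline."""
    if not samples_us:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_us)
    return {
        "p50_us": percentile_nearest_rank(ordered, 50),
        "p99_us": percentile_nearest_rank(ordered, 99),
        "max_us": ordered[-1],
        "jitter_us": ordered[-1] - ordered[0],
        "deadline_misses": sum(1 for s in samples_us if s > deadline_us),
    }


if __name__ == "__main__":
    DEADLINE_US = 1000.0  # sub-millisecond requirement
    cpu = [2000.0] * 97 + [15000.0] * 3  # synthetic: 2 ms with rare 15 ms spikes
    fpga = [500.0] * 100                 # synthetic: steady 500 us
    print("CPU: ", latency_report(cpu, DEADLINE_US))
    print("FPGA:", latency_report(fpga, DEADLINE_US))
```

Replace the synthetic lists with timestamps captured from your own system. The `deadline_misses` and `jitter_us` fields are the numbers to watch.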

Operating System Considerations

Your processor choice determines your OS options:

- Linux: Runs on Intel Atom, Intel Core, Intel Xeon, and high-end ARM (Cortex-A). Ideal for general-purpose edge computing, with a complete software ecosystem.

- RTOS (Real-Time Operating System): Required for deterministic control. Common on ARM Cortex-M microcontrollers. On selected Intel Atom variants, real-time Linux patches fill a similar role.

I’ve deployed both. Linux offers flexibility and faster development. An RTOS gives applications guaranteed timing.
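
On Linux, the usual first step toward tighter timing is to request a real-time scheduling policy from the kernel. The next step is to measure how late each control cycle actually wakes up. The sketch below uses Python's `os.sched_setscheduler` with `SCHED_FIFO`, which is Linux-only and needs root or `CAP_SYS_NICE`. It then runs a loop that sleeps until absolute deadlines, so sleep errors do not build up as drift. The priority of 50 and the 1ms period are arbitrary example values. Python cannot provide hard real-time guarantees, which is why timing-critical systems use an RTOS or a PREEMPT_RT kernel. Treat this as a measurement tool, not a controller.

```python
import os
import time


def try_enable_fifo(priority: int = 50) -> bool:
    """Request SCHED_FIFO for this process; return False if unsupported or not permitted."""
    policy = getattr(os, "SCHED_FIFO", None)
    if policy is None or not hasattr(os, "sched_setscheduler"):
        return False  # not Linux, or this Python build lacks the API
    try:
        os.sched_setscheduler(0, policy, os.sched_param(priority))
    except OSError:
        return False  # typically EPERM without root or CAP_SYS_NICE
    return True


def run_periodic(task, period_s: float, cycles: int) -> list:
    """Run `task` once per period; return each cycle's wake-up lateness in seconds."""
    lateness = []
    deadline = time.monotonic() + period_s
    for _ in range(cycles):
        task()
        remaining = deadline - time.monotonic()
        if remaining > 0:
            time.sleep(remaining)
        lateness.append(max(0.0, time.monotonic() - deadline))
        deadline += period_s  # absolute schedule: sleep jitter does not become drift
    return lateness


if __name__ == "__main__":
    print("SCHED_FIFO enabled:", try_enable_fifo())
    late = run_periodic(lambda: None, period_s=0.001, cycles=1000)
    print(f"worst wake-up lateness: {max(late) * 1e6:.0f} us")
```

Running the same script on a stock kernel and on a PREEMPT_RT kernel is a cheap way to see whether real-time Linux is good enough for your loop.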

5G and Multi-Access Edge Computing (MEC)

This is where edge computing gets interesting. 5G networks push compute out to the edge of the cellular infrastructure, literally inside or near cell towers.

Intel Xeon 6 processors dominate this space. They handle massively parallel workloads: user plane functions (routing data packets), network security (firewalls, deep packet inspection), and content delivery. According to Intel's own data, Xeon 6 delivers 4.2x the 5G UPF performance of previous generations.

Cloudlet Architectures

Think of cloudlets as small-scale data centers between devices and the cloud: at cell towers, at the enterprise edge, or at regional aggregation points.

The architecture I have seen work:

- Sensor/endpoint device: ARM or Intel Atom.

- Cloudlet edge: Intel Xeon 6 for aggregation, preprocessing, and local inference.

- Cloud: Batch analytics, model training, and long-term storage.

This tiered approach is cost-effective. Latency stays low because devices get sub-50ms responses from cloudlets. Training stays affordable because the expensive processing happens in a centralized cloud where GPUs are shared.
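
The placement logic behind that tiered setup can be expressed as a simple rule. Push each workload as far toward the cloud as its latency budget allows, and keep training centralized. The thresholds below reuse figures from this article (sub-50ms to a cloudlet, 200+ ms to the cloud) as assumptions, so measure your own network before relying on them. The `place` function is hypothetical. It ignores bandwidth, cost, and data-residency rules that would matter in a real deployment.

```python
CLOUDLET_RTT_MS = 50.0  # assumption: "sub-50ms responses" from a cloudlet
CLOUD_RTT_MS = 200.0    # assumption: the 200+ ms cloud round trip measured earlier


def place(latency_budget_ms: float, is_training: bool = False) -> str:
    """Pick a tier: as far from the device as the latency budget allows."""
    if is_training:
        return "cloud"      # centralized, shared GPUs keep training affordable
    if latency_budget_ms < CLOUDLET_RTT_MS:
        return "device"     # no network hop fits: ARM / Intel Atom on the endpoint
    if latency_budget_ms < CLOUD_RTT_MS:
        return "cloudlet"   # Xeon 6 aggregation and local inference tier
    return "cloud"          # batch analytics, long-term storage


if __name__ == "__main__":
    jobs = [
        ("collision stop", 10.0, False),
        ("camera analytics", 120.0, False),
        ("daily report", 60_000.0, False),
        ("retrain detector", 1e9, True),
    ]
    for name, budget, training in jobs:
        print(f"{name}: {place(budget, training)}")
```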

Getting Started: What I Would Do Differently

If I were starting an edge computing project today, here is how I would approach it:

Start with power budgets. Define your power and thermal limits before selecting a processor. Fanless? You are looking at Intel Atom or ARM. Active cooling acceptable? Intel Core and Xeon 6 open up.

Prototype on dev boards. Intel offers NUC systems powered by Atom and Core processors, and ARM Cortex-A evaluation boards are also available. Do not trust benchmarks alone; test your actual workload.
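
Testing your actual workload does not require fancy tooling. Below is a minimal timing harness. It assumes your workload can be wrapped in a zero-argument Python callable, such as one inference call on a prepared input. The warm-up count, the run count, and the stand-in workload are hypothetical. Run the harness on the target board, inside its enclosure, at a realistic ambient temperature. Let it run long enough for the chip to reach thermal steady state, or you will miss throttling that only appears later.

```python
from __future__ import annotations

import statistics
import time
from typing import Callable


def benchmark(fn: Callable[[], object], runs: int = 200, warmup: int = 20) -> dict[str, float]:
    """Time `fn` repeatedly and report latency statistics in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        fn()  # let caches and CPU frequency scaling settle before measuring
    times_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        times_ms.append((time.perf_counter() - start) * 1000.0)
    times_ms.sort()
    p99_index = (99 * runs + 99) // 100 - 1  # nearest-rank 99th percentile
    return {
        "mean_ms": statistics.fmean(times_ms),
        "p50_ms": statistics.median(times_ms),
        "p99_ms": times_ms[p99_index],
        "max_ms": times_ms[-1],
    }


if __name__ == "__main__":
    # Stand-in workload: replace with your real inference or processing call.
    result = benchmark(lambda: sorted(range(50_000), reverse=True))
    for key, value in result.items():
        print(f"{key}: {value:.3f}")
```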

Plan for thermal realities. Processors rated for commercial temperatures (0-70°C) are fine in offices. Industrial deployments need industrial-rated parts (0-85°C or wider). I learned this the hard way when a warehouse deployment slowed down in hot weather.

Consider hybrid architectures. Use ARM for energy-constrained sensors, Intel Atom for gateways, and Xeon 6 for regional aggregation. Match processors to workloads rather than forcing every workload onto one uniform architecture.

The Bottom Line

Edge computing processors are not general purpose. Intel Atom excels in ultra-low-power IoT gateways. Intel Xeon 6 dominates high-throughput edge servers and 5G infrastructure. ARM-based architectures win on energy efficiency in battery-operated and thermally constrained applications.

The trick is to match processor capabilities to your particular constraints: power budget, thermal design, real-time needs, and workload requirements. I have seen deployments fail at varying rates, both on budget ARM chips and on overpowered off-the-shelf Intel processors. In every case, the team had not accounted for thermal constraints.

When you are designing industrial IoT systems, autonomous robots, or video surveillance networks, define your power and thermal budgets first. Then choose the processor that fits those constraints and meets your performance requirements. Everything else (software frameworks, cloud integration, security) gets easier once you have the right silicon underneath.


I’m a software engineer and tech writer with a passion for digital marketing. Combining technical expertise with marketing insight, I write engaging content on technology, AI, and digital strategy, drawing on hands-on experience in both coding and marketing.