Look, I’ll be honest. Last year, I considered AI chips as nothing more than too expensive fancy GPUs. Then I began to dig into the reasons behind spending of 40,000 dollars per unit of H100 when other players were quietly opting to switch to Google TpUs costing a third of the price. I was changed and this altered my thoughts in regards to AI infrastructure completely.

The point is as follows: the market of AI chips does not only pertain to the sheer power anymore. It is about performance dollar and power consumption and being bound to an ecosystem of a single vendor. This is concerning whether you are starting a startup that needs to train models with limited budgets, running an enterprise infrastructure or whether you are interested in understanding the future of AI hardware.

Why Everyone’s Talking About Custom AI Chips Right Now

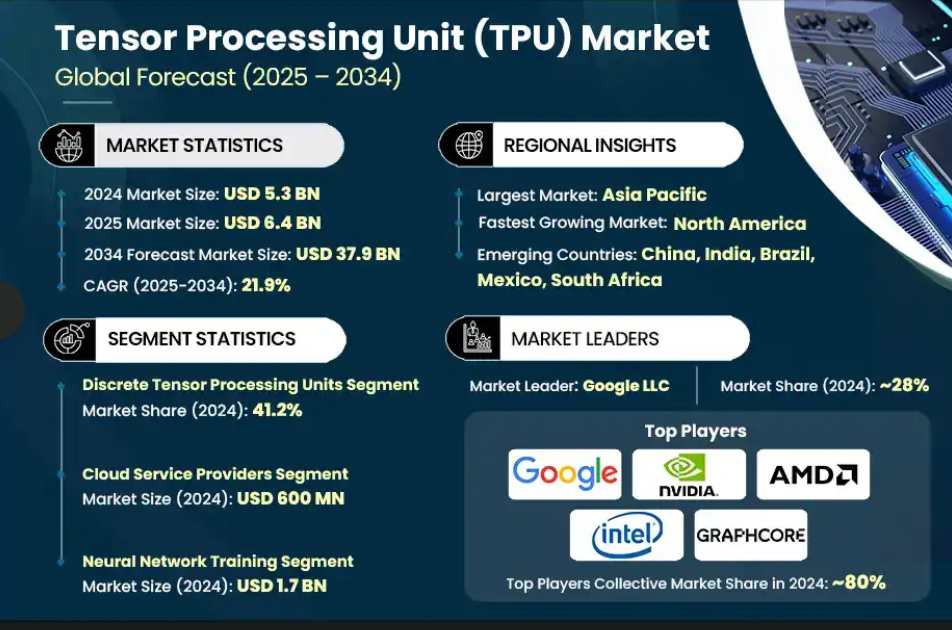

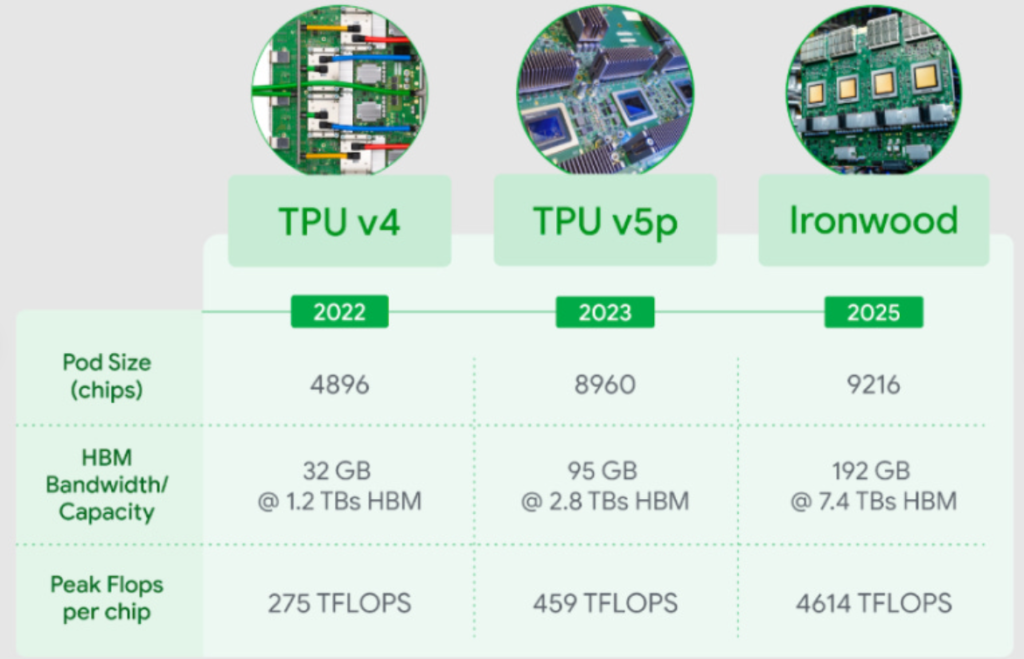

The GPU monopoly is cracking. NVIDIA maintains a market share of more than 90 percent of the data center AI sector, yet it is developing at a rapid rate. The TPU Ironwood, the seventh-generation chip of Google, has also recently been launched with 4,614 teraflops of FP8 performance, and 192GB of high-bandwidth memory. It is almost 4.5 times faster than the last generation memory bandwidth.

However, here is where I took notice, TPU v6e is up to 4x better on a performance-per-dollar basis on transformer models compared to NVIDIA H100s. When you bring in committed-use discounts, TPU rates can go as low as -0.39 per chip-hour. That would be H100 pay on AWS at 3.90/hour or close to 7/hour on Azure.

The idea of Meta to abandon billions of dollars to install Google TPUs in their data centers by 2027. Claims that Trainium3 by Amazon can reduce the AI cost by 50 percent over the clusters of H100. These are not mere experiment projects but gigantic company alterations.

The NVIDIA H100 vs H200 Reality Check

I spoke to one of my friends who is running the ML workloads in production. Last quarter he had an upgrade on H100 to H200. The numbers? H2O00 has a rate of more than 31,000 tokens per second which is 45% of H100 with a rate of 21,806 tokens/second.

With a memory capacity of 4.8 TB/s, the H2O0 almost doubles memory (141GB vs 80GB HBM3) capacity. On workloads that are memory-intensive (with individual workloads that can be very large), additional headroom is important (such as serving a large language model). The thing is, though, that you continue paying the same amount of $25,000-40,000 per unit, power consumption remains similar, about 700W.

In perspective, it is sufficient power to operate a small household. Take that and multiply that by hundreds or thousands of GPUs in a data center and the cost of energy is an enormous line item.

Breaking Down the Major Players

Google Cloud TPU – The Cost Efficiency Champion

The strategy of Google is unique. They are not selling you hardware, they are just selling you access to it on Google Cloud. In April 2025, though, the TPU v7 (Ironwood) was brought out with an inference-first system. The TPU v7 is 100 percent more energy efficient at a higher frequency than TPU v6, which is already providing 67 percent in energy efficiency improvement over TPU v5e.

I did some calculations: TPU v5e can train Llama 2 7B at cost of about 0.25 at 3 years of committed prices. That is much cheaper compared with deployments based on H100 taking into consideration networking and data egress expense.

The trade-off? You are imprisoned in Google Cloud. Switching CUDA to the XLA compiler stack of TPU requires recoding, recoding performance, and even a complete change of programming model structure, such as JAX. That is the actual obstacle to migration in many organizations.

AMD MI300X – The Dark Horse Challenger

AMD is currently making attempts to topple the MI300X of NVIDIA. It has a memory capacity of 192GB of HBM memory which is higher than that of the H100 and H200. The ROCm software ecosystem is going to grow up but it is still miles behind the CUDA in terms of library coverage and familiarity with the developer.

MI300X hardware is not personally tested by me, but industry tests indicate that it is competitive under some workloads. Availability is the greater question. The problem of chip shortages and the challenges of ramping up the production of the chip imply that MI300X is not yet an easy procurement in large quantities.

MTIA of Meta and Dojo of Tesla When You Build Your Own.

Meta has optimized its custom AI accelerator (MTIA) to inferential, rather than training-oriented. They are not competing to train large-scale, in hundreds of millions of requests per day, to recommend them, they are aiming at the several billions of requests per day that Facebook, Instagram, and WhatsApp are doing each day.

This is the reverse case due to Tesla Dojo chip, which is trained to optimize their autonomous driving neural networks. At the point where you own the whole stack, including data collection and deployment, then custom silicon is reasonable. For the rest of us? It is unlikely to be even worth the matter of the billions of the R&D.

The Real Costs Nobody Talks About

Energy and Cooling Infrastructure

This is what surprised me the most when I conducted a research on AI: it is projected that the energy requirements of AI will rise by half a year by 2030. The movement of data within chips uses more energy than the computations.

Professional cooling systems to support high-density GPU/TPU applications are an addition of 15-25% to the cost of infrastructure. You are not spending on a skim- Package H100 that costs 40,000, you are investing in Temperature-controlling and power distribution transparent systems, and specialized liquid cooling. Those indirect costs are important when considering hardware.

The Memory Bandwidth Bottleneck.

The situation of increasing discrepancy between compute capabilities and the memory access speeds continues even with such an improvement as HBM 3e. This issue in the wall of memory affects the speed of training and the latency of inferences directly.

The 7.37 TB/s per chip bandwidth of Ironwood is helpful, though model sizes are increasing at a rate that goes beyond the capability of memory technology. Memory bandwidth is a major constitution in the face of hardware improvement that has an impact on overall cost of ownership.

How to Actually Save Money on AI Compute

Spot Instances and Committed-Use Contracts

This strategy was tested by me: there are spot instances that can reduce the price by up to 90% in comparison with on-demand pricing. The fact that AI training is fault-tolerant with proper checkpointing makes non-critical training with spot instances thousands cheaper.

Multi-year contracts also have a significant impact on cost-saving by as much as 40-60 percentage through committed-use discounts. Negotiating volume based discounts are economically feasible in case you have predictable workloads. The savings associated with doing this with one startup that I was aware of was a reduction in Cloud AI bills to 55% with 3-year committed TPU pricing.

Model Optimization Beats Hardware Upgrades

Scale-up models by restricting the amount of money you spend on them before expanding them. Knowledge reduction, quantization (less precision, 32-bit to 8-bit), and model pruning can reduce the seen as 50-70 percent of the requirements in GPUs or TPUs with minimal or no harm to performance.

I got to see a composition of a group that saw their inference expenses decrease by a factor of 100 as they mixed up quantization with planned batching. They kept the quality but reduced costs to less than half a penny a million of tokens.

Regional Arbitrage and Multi-Cloud Strategy

Prices on clouds run amok geographically. The overall AI compute is significantly less in AWS Mumbai and Google Cloud Sao Paulo, as compared to the regions in the US. Using Singapore as a training location, ByteDance can save money without compromising the performance.

The gimmick is that one should consider whether training in lower cost areas, with a minor sacrifice in latency, gives overall better economics. In case of a workload of batch training, this is completely accurate.

Learning Resources That Don’t Cost a Fortune

NVIDIA’s Free Course Collection

The following free courses at NVIDIA are quite decent:

Best Open-ended RAG Agents (8 hours)

Artificial Intelligence datacenter (optimization of a GPU workflow)

Further details on the article are provided at the conclusion of the article.

Quicken Data Science Processes.

These aren’t fluff. They are practical, hands on training which in fact teaches about the methods of optimization.

Google Cloud & TPU Training.

The Professional Machine Learning Engineer certification of Google Cloud (two hundred dollars exam) involves the implementation of production ML systems. Their AI practical workshops prepare you with Vertex AI. FOCU guides Typically, TPU architecture guides are found in TensorFlow and JAX tutorials.

To get a more significant picture of the world of AI hardware, it is possible to borrow books on AI Accelerators Explained to gain more insight on how various chips handle AI loads.

Free GPU/TPU Orleans for Testing.

The use of Google Colab has access to K80 and T4 GPUs free of charge with unlimited usage. Kaggle provides 30 hours/week access to GPU. The first free tier of AWS SageMaker is 250 hours a month within two months.

These will not ever substitute the infrastructure of production, however, they are the right way to go to learn the art of optimization and not spend money. I spent dozens of hours in Colab trying different model versions to commit to paid infrastructure.

The Chip Shortage Reality

The limitations are not making rapid gains in supply chain. The production capacity is still a bottlenecked part in HBM production with very few manufacturers producing in volume. The companies are finding it difficult to find adequate amount of the newest chips and have to make trade-offs on the timelines.

Capital intensity sets up obstacles even to large technology firms. Creating competitive AI infrastructure takes billions -billions to develop custom silicon, create data centers and data center operations. It is not a game mid-market organizations can play off so easily.

To gain a more technical insight into the manufacturing and design of these processors, other materials such as the Complete Guide to Semiconductor Chipsets can be used to gain a background of the architecture behind them and the difficulties with their manufacture.

What Actually Matters for Your Use Case

For Training Large Models

In the billions of cells, you require bare computational might in case you are training foundation models. It has H100/ H200 clusters or TPU pods. The argument is profitability: is it worth spending on $15,000 -$40,000 on a GPU or is the price dedication at TPU a wiser choice?

For Inference at Scale

Custom chips excel in inference. This is the very use case that Google Ironwood 7, Amazon Trainium, and MTIA are aimed at. Inference-optimized silicon has the smart choice because it has lower latency, improved power efficiency and costs that are dramatically reduced.

For Edge Deployment

The Grace Blackwell architecture by NVIDIA also allows the deployment of clouds to the edge with no code changes. This is important when the latency is important, such as autonomous vehicles, real-time video processing scalability where cloud round-trips cannot be used.

Looking Ahead

The GPU monopoly process really is over but migration costs can still tie many organizations down due to software ecosystem requirements. Multivendor strategies have remained difficult due to the need to depend on software ecosystems. The ten-year history of optimization of CUDA produces actual switching costs.

However, this is the thing that I return again and again so far, when it comes to large PPUs and their cost-effectiveness: It has been independently determined that TPU deployments are 4-10 times more cost-effective than GPUs when it comes to training large LLMs. That does not happen to be fringe, that is a game-changer to anyone operating AI in scale.

The growth of AI will probably face energy drawbacks in the next 5-10 years in case the current growth is maintained. Energy efficiently building structures should not be an issue that comes later but something that organizations must consider when planning to go long-term.

My advice? Begin by using free learning materials. Have practical experience with Colab and Kaggle. Sign on TPU v5e a trial run first of very costly H100 clusters. Optimize them and then purchase larger hardware. And take heed to this: it is not he that is the fastest chip that makes the best chip; but that which gives him the performance he requires at the business cost.

The competition of the AI hardware is not decelerating. Now you understand where to look, what not to, how to be smarter in your decision making regarding infrastructure and not spend a lot on hype.

I’m software engineer and tech writer with a passion for digital marketing. Combining technical expertise with marketing insights, I write engaging content on topics like Technology, AI, and digital strategies. With hands-on experience in coding and marketing.