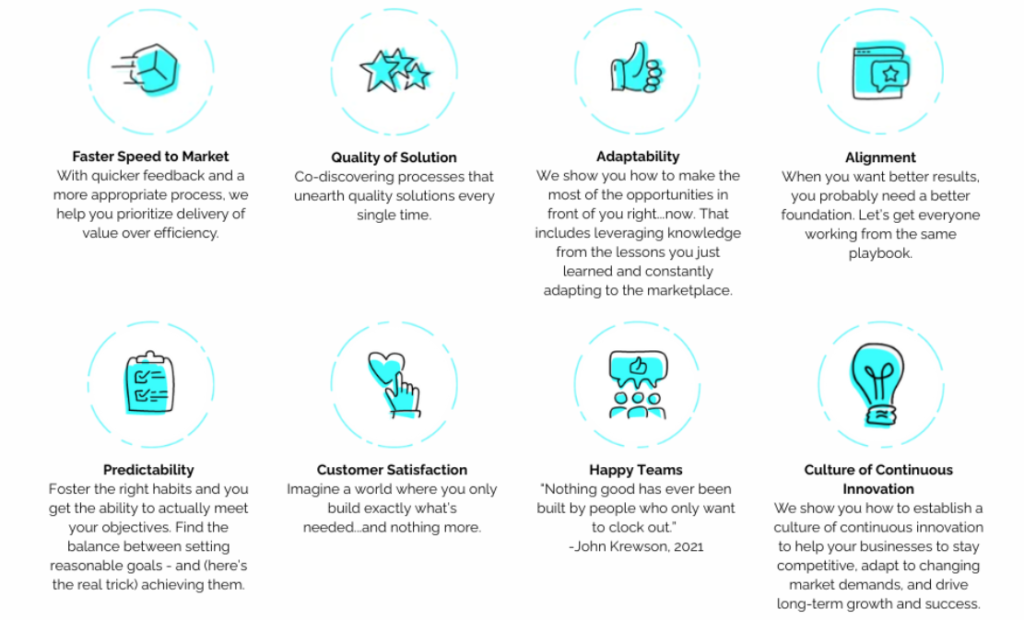

Introduction – Why 40% of Enterprise AI Agents Fail at Scale

Here's the bad news: 61 percent of businesses are already developing AI agents, yet 40 percent of those deployments will never reach full production scale. I have watched this pattern play out in organization after organization, and the causes are eerily similar.

Roughly 70 percent of these failures come down to poor integration architecture. Teams underestimate the complexity of monitoring, ignore governance frameworks entirely, and set unrealistic expectations about what agents can actually handle in the first month. And change management? Often an afterthought, addressed only once users revolt.

This article shows you how to land in the successful 60 percent. Not with theory or a vendor sales pitch, but with a realistic roadmap that accounts for the ugly reality of enterprise systems.

Here's the timeline most teams actually need: 4-8 weeks to get something basic running, 12 weeks to run a real pilot on production traffic, and 6-12 months to reach full-scale rollout across multiple departments. Anyone who claims it can be done faster is either lying or hasn't worked with your legacy infrastructure.

Let's break down how to actually do it.

Pre-Deployment Assessment – Know What You’re Getting Into

Organizational Readiness Check

Audit these five areas before you write a single line of code:

Executive sponsorship: This is not optional. Without a VP or C-level champion who understands the 6-12 month commitment and the budget reality, your project dies in month four when the first setback hits.

Budget allocation: Use real numbers. Account for compute, hiring, training, tool licensing, and integration. The pilot phase runs $500-2K per month. The scale phase jumps to $5-15K per month. A fully governed enterprise deployment? Expect $50K+ per month, with ROI that can reach 3-5x.

Skills inventory: Do you have AI engineers fluent in prompt engineering and LLM behavior? Integration engineers who can tame your API zoo? Ops people who have built monitoring for non-deterministic systems? If you need to hire for two of those three, start recruiting before the project begins.

Cultural preparedness: Resistance to adoption driven by fear of job displacement kills more projects than technical failure does. If your organization hasn't yet had an open conversation about "will AI take my job?", now is the time.

Legacy system compatibility: Document every system your agents will touch. How many lack modern APIs? How many will need screen scraping or RPA bridges? That integration complexity is the best predictor of your timeline.

Use Case Assessment Framework

Answer these five questions for every candidate use case:

- What specific problem does it solve? Generic answers like "improve efficiency" fail. Specific ones work: "cut invoice processing time from 4 days to 6 hours."

- Is it automatable? Repetitive, rule-based, high-volume tasks with clear success criteria are the best candidates. Tasks that demand nuanced judgment or handle genuinely novel situations? That's phase three, not phase one.

- What's the ROI? Multiply the hours saved by the cost per hour, then add the value of reduced errors. If you can't reach 3x ROI in year one, choose another use case.

- What's the risk? Consider financial exposure, compliance implications, and customer impact if the agent fails. Start with low-risk domains.

- What's the effort? Integration complexity + data availability + required organizational change.
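The ROI question reduces to simple arithmetic. Here is a minimal Python sketch of it, assuming annual figures and a hypothetical `UseCaseEstimate` record; the example numbers are invented for illustration, not benchmarks.

```python
from dataclasses import dataclass

@dataclass
class UseCaseEstimate:
    """Inputs for one candidate use case; every figure here is an illustrative estimate."""
    name: str
    hours_saved_per_year: float   # staff hours the agent frees up annually
    cost_per_hour: float          # fully loaded hourly cost of that staff time
    error_reduction_value: float  # annual value of fewer errors (rework, penalties, refunds)
    annual_agent_cost: float      # compute, licensing, integration, and upkeep

def first_year_roi(uc: UseCaseEstimate) -> float:
    """Year-one return as a multiple of what the agent costs to run."""
    benefit = uc.hours_saved_per_year * uc.cost_per_hour + uc.error_reduction_value
    return benefit / uc.annual_agent_cost

def worth_pursuing(uc: UseCaseEstimate, min_multiple: float = 3.0) -> bool:
    """Apply the 3x-in-year-one rule of thumb from the checklist."""
    return first_year_roi(uc) >= min_multiple

invoices = UseCaseEstimate("invoice processing", 2_000, 45.0, 10_000, 24_000)
print(f"{invoices.name}: {first_year_roi(invoices):.2f}x, pursue={worth_pursuing(invoices)}")
# prints "invoice processing: 4.17x, pursue=True"
```

Keeping the threshold as a parameter makes it easy to tighten the bar for riskier domains without touching the formula.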

Prioritization Matrix – Where to Start

Plot each use case on two axes: ROI vs. effort/risk.

Quick wins (high ROI, low risk, low effort): Start here. They save money and build organizational confidence.

- Customer support FAQ automation (typically deflects 60% of tier-1 tickets)

- Invoice processing (high volume, repetitive, clear validation rules)

- Lead qualification (clear scoring criteria, instant responses for sales)

- HR policy Q&A (24/7 availability, easy-to-build knowledge base)

Medium on all dimensions: Your phase two candidates.

Complex projects (high ROI, high risk, high effort): Phase three, after you've proven yourself.

Money pits (low ROI, high risk): Don't do them.

I have watched teams ignore this prioritization and jump straight to complex, high-stakes use cases because they're more impressive. Those are also where 90 percent of first-time implementations fail.
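To make the matrix repeatable across teams, you can score each candidate and let a small function place it. The sketch below assumes 1-5 scores for ROI and for combined effort/risk; the cut-offs and the example scores are hypothetical choices, not part of any standard framework.

```python
def place_on_matrix(roi: int, effort_risk: int) -> str:
    """Map 1-5 scores for ROI and combined effort/risk to a rollout phase.

    The cut-offs (>=4 is high, <=2 is low) are illustrative assumptions.
    """
    for score in (roi, effort_risk):
        if not 1 <= score <= 5:
            raise ValueError("scores must be between 1 and 5")
    high_roi, low_roi = roi >= 4, roi <= 2
    high_cost, low_cost = effort_risk >= 4, effort_risk <= 2
    if high_roi and low_cost:
        return "phase 1"
    if high_roi and high_cost:
        return "phase 3"
    if low_roi and high_cost:
        return "avoid"
    return "phase 2"  # middle band, plus low-ROI/low-cost ideas

# Hypothetical scores for a few candidates.
candidates = {"FAQ automation": (5, 1), "contract negotiation": (5, 5),
              "HR policy Q&A": (4, 2), "custom reporting bot": (2, 4)}
plan = {name: place_on_matrix(*scores) for name, scores in candidates.items()}
print(plan)
```

The value is less in the code than in forcing every stakeholder to commit to explicit scores before arguing about priorities.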

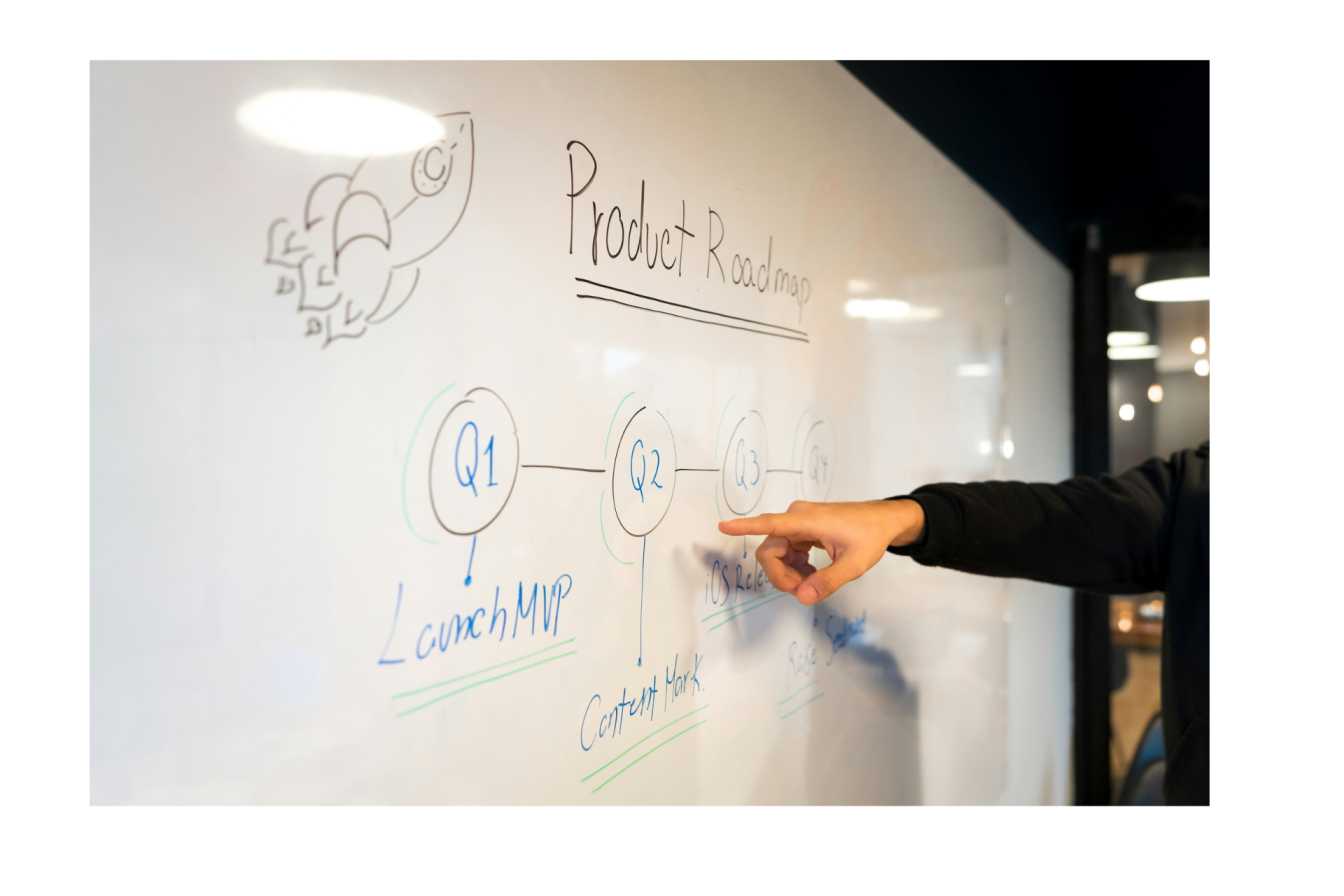

Phased Implementation Roadmap – The 18-Month Journey

Phase 1 – Pilot (Weeks 1-12)

Scope: One use case, one department, low traffic (start at 10 percent, capped at 25 percent), and crystal-clear success criteria.

Team structure: At least five people:

- AI engineer (LLM proficiency, prompt engineering)

- Integration engineer (data pipelines, APIs)

- Operations engineer (monitoring, incident response)

- Business owner (requirements, success validation)

- Security/compliance officer (can't be skipped, even in pilots)

Timeline breakdown:

Weeks 1-2: Requirements and process mapping. Map the existing human process step by step: every decision point, every exception case, every system it touches. In my experience, teams that skip this meticulous mapping lose 4-6 weeks later debugging unexpected edge cases.

Weeks 3-4: Framework selection and environment setup. I recommend starting pilots with LangChain or AutoGen: mature ecosystems, good documentation, fast time to value. For the LLM, use GPT-4 or Claude Sonnet. Don't build custom infrastructure yet.

Weeks 5-8: Agent development. Build the core agent logic, integrate with 2-3 critical systems, and add basic guardrails. This is where you learn which APIs are actually usable and which need workarounds.

Weeks 9-10: Testing and optimization. Run in a sandbox with production-like data. Load test at 2x expected capacity; 70 percent of teams skip this and regret it when they scale.

Weeks 11-12: Limited production rollout. Start with 10 percent of traffic, with a human reviewing every agent action. Monitor obsessively. Hold daily team syncs during the first week and twice-weekly syncs after that.
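One common way to send a fixed share of traffic to the agent is deterministic hash bucketing. The sketch below is an assumption-laden illustration, not a prescribed mechanism: `traffic_bucket` and `route` are hypothetical helpers, and "existing_process" stands in for whatever humans do today.

```python
import hashlib

def traffic_bucket(request_key: str) -> int:
    """Deterministically map a request (or customer) key to a bucket from 0 to 99."""
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100

def route(request_key: str, rollout_percent: int, pilot_review: bool = True) -> dict:
    """Send rollout_percent of traffic to the agent; in the pilot, a human approves every agent action."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    to_agent = traffic_bucket(request_key) < rollout_percent
    return {"handler": "agent" if to_agent else "existing_process",
            "needs_human_review": to_agent and pilot_review}

decisions = [route(f"ticket-{i}", rollout_percent=10) for i in range(10_000)]
agent_share = sum(d["handler"] == "agent" for d in decisions) / len(decisions)
print(f"agent share: {agent_share:.1%}")
```

Because the buckets are deterministic, raising `rollout_percent` from 10 to 25 keeps every request key already routed to the agent and only adds new ones, and the same key always gets the same route, which makes incidents easier to reproduce.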

Go/no-go success criteria:

- Task completion rate of 85 percent or higher (without human intervention)

- User satisfaction score of 4/5 or higher

- Zero critical failures (data loss, security breaches, compliance violations)

- Demonstrable ROI (even if small)

If you hit three out of four, move to phase two. Two or fewer: stop, diagnose, and fix the problems before scaling.
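Writing the gate down as code keeps the decision from drifting under schedule pressure. This is a minimal sketch of the three-of-four rule; the `PilotResults` fields and the way ROI is reduced to a single `net_benefit` number are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class PilotResults:
    task_completion_rate: float  # share of tasks completed without human intervention (0-1)
    user_satisfaction: float     # mean rating on a 1-5 scale
    critical_failures: int       # data loss, security breaches, compliance violations
    net_benefit: float           # measured savings minus pilot cost, in any currency

def go_no_go(r: PilotResults) -> tuple[str, list[str]]:
    """Apply the three-of-four rule; return the decision and the criteria that were missed."""
    checks = {
        "task completion >= 85%": r.task_completion_rate >= 0.85,
        "satisfaction >= 4/5": r.user_satisfaction >= 4.0,
        "zero critical failures": r.critical_failures == 0,
        "demonstrable ROI": r.net_benefit > 0,
    }
    missed = [name for name, passed in checks.items() if not passed]
    decision = "proceed to phase 2" if len(checks) - len(missed) >= 3 else "stop and diagnose"
    return decision, missed

print(go_no_go(PilotResults(0.81, 4.3, 0, 12_000.0)))
# prints ('proceed to phase 2', ['task completion >= 85%'])
```

Returning the missed criteria alongside the decision gives the team a starting point for diagnosis even when the gate passes.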

Phase 2 – Scale (Months 4-9)

Scope: Scale the pilot to 100% of traffic, add 1-2 new use cases, and expand into additional departments.

Team expansion: 8-10 people. Add a product manager (prioritization, roadmap), a data scientist (model optimization), and a change manager (adoption, training).

Timeline:

Month 1: Gradually scale the pilot use case from 10% to 100% of traffic. Watch for performance degradation, runaway resource usage, and edge cases that only appear at volume.

Months 2-3: Launch new use cases based on lessons from the pilot. Reuse wherever you can: integration connectors, monitoring dashboards, guardrail logic.

Month 4: Governance maturity. Introduce a centralized agent registry that records each agent's identity, owner, permissions, and data access. Add policy enforcement for high-risk actions. In my experience, this is the point where teams discover that their pilot's good-enough security model doesn't scale.

Months 5-6: Optimize based on production telemetry. Tune prompts, refine tool-selection logic, and streamline API calls to cut latency and cost.
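The centralized agent registry from Month 4 can start as a very small data model. The sketch below is a hypothetical in-memory version (real deployments would back it with a database or a governance product); the action names such as `refund.request` are invented examples.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AgentRecord:
    agent_id: str
    owner: str                                       # accountable person or team
    permissions: frozenset[str]                      # actions the agent may take
    data_access: frozenset[str]                      # data domains the agent may read
    high_risk_actions: frozenset[str] = frozenset()  # permitted, but only with human approval

class AgentRegistry:
    """Single source of truth for which agents exist and what each may do."""

    def __init__(self) -> None:
        self._agents: dict[str, AgentRecord] = {}

    def register(self, record: AgentRecord) -> None:
        if record.agent_id in self._agents:
            raise ValueError(f"agent {record.agent_id!r} is already registered")
        self._agents[record.agent_id] = record

    def authorize(self, agent_id: str, action: str) -> str:
        """Return 'allow', 'needs_approval', or 'deny' (deny by default)."""
        record = self._agents.get(agent_id)
        if record is None or action not in record.permissions:
            return "deny"
        if action in record.high_risk_actions:
            return "needs_approval"
        return "allow"

    def can_read(self, agent_id: str, domain: str) -> bool:
        record = self._agents.get(agent_id)
        return record is not None and domain in record.data_access

registry = AgentRegistry()
registry.register(AgentRecord(
    agent_id="support-agent",
    owner="customer-support-team",
    permissions=frozenset({"ticket.reply", "refund.request"}),
    data_access=frozenset({"tickets", "orders"}),
    high_risk_actions=frozenset({"refund.request"}),
))
print(registry.authorize("support-agent", "ticket.reply"))    # allow
print(registry.authorize("support-agent", "refund.request"))  # needs_approval
print(registry.authorize("support-agent", "user.delete"))     # deny
```

Denying by default means a newly added action has no effect until someone explicitly grants it, which is usually the safer failure mode.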

Success criteria:

- Cost reduction of more than 20% compared with the previous manual process

- Multi-department adoption with documented business impact

- <1% critical incident rate

- Governance framework with audit trails in operation

- 80% user adoption rate

Phase 3 – Enterprise (Months 10-18)

Scope: 5-10 use cases, 20-50 individual agents, and an enterprise-wide platform with full compliance.

Team: An AI Center of Excellence of 15-30 people spanning engineering, operations, security, change management, and business enablement.

Timeline:

Months 10-12: Standardization. Reusable patterns, shared components, and uniform governance procedures. This is where you stop running projects and start operating a platform.

Months 13-15: Multi-department rollout across Sales, Operations, Finance, and expanded Support. Each department adapts the established patterns to its own workflows.

Months 16-18: Integration and optimization. Enable agent-to-agent communication (e.g., a Support agent triggers a Finance agent to process a refund). Build executive dashboards. Continuously optimize performance and cost.
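Agent-to-agent communication is easiest to govern when every handoff goes through one channel that logs it. The sketch below uses a hypothetical in-process `AgentBus` as a stand-in for a real message broker, and the refund limit is an invented policy value, not a recommendation.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Message:
    sender: str
    recipient: str
    intent: str
    payload: dict = field(default_factory=dict)

class AgentBus:
    """In-process stand-in for a message broker between agents (illustrative only)."""

    def __init__(self) -> None:
        self._handlers: dict[str, Callable[[Message], dict]] = {}
        self.audit_log: list[Message] = []

    def subscribe(self, agent_id: str, handler: Callable[[Message], dict]) -> None:
        self._handlers[agent_id] = handler

    def send(self, msg: Message) -> dict:
        self.audit_log.append(msg)  # every cross-agent request is recorded
        handler = self._handlers.get(msg.recipient)
        if handler is None:
            return {"status": "error", "reason": f"no agent named {msg.recipient!r}"}
        return handler(msg)

AUTO_REFUND_LIMIT = 500.0  # hypothetical policy threshold

def finance_agent(msg: Message) -> dict:
    if msg.intent != "process_refund":
        return {"status": "rejected", "reason": f"unsupported intent {msg.intent!r}"}
    amount = float(msg.payload["amount"])
    if amount > AUTO_REFUND_LIMIT:
        return {"status": "escalated", "reason": "above auto-approval limit"}
    return {"status": "refunded", "order_id": msg.payload["order_id"], "amount": amount}

bus = AgentBus()
bus.subscribe("finance", finance_agent)
result = bus.send(Message("support", "finance", "process_refund",
                          {"order_id": "A-1001", "amount": 49.0}))
print(result)  # {'status': 'refunded', 'order_id': 'A-1001', 'amount': 49.0}
```

The receiving agent, not the caller, enforces its own limits, so a misbehaving Support agent cannot push a large refund through unchecked.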

Business impact at this phase:

- 30% of knowledge work automated

- 40% productivity improvement in agent-based workflows

- 60-80% cost savings compared with manual execution

- Measurable competitive advantage in customer responsiveness or operational efficiency

For the foundational concepts behind how these multi-agent systems work under the hood, see Multi-Agent Systems.

Technology Stack Selection – What to Use When

Pilot Phase Stack (Weeks 1-12)

Framework: LangChain or AutoGen. Both are well documented and have mature tool ecosystems.

LLM: GPT-4 or Claude Sonnet 4. Don't fine-tune until you have substantial domain-specific data; base models are surprisingly capable at most enterprise tasks.

Platform: Cloud-native on AWS, Azure, or GCP. Use managed services: Bedrock Agents or Azure AI Foundry Agent Service provide security, scaling, and observability.

Cost: $500-2K/month

Risk: Low

Speed: Fastest time to value

Scale Phase Stack (Months 4-9)

Framework: Add CrewAI for role-based multi-agent designs, or LangGraph for complex stateful workflows. I have used both: CrewAI is easier for simple multi-agent setups, while LangGraph gives you finer control over complex orchestration.

LLM: A mix of closed-source models (GPT-4, Claude) for complex reasoning and open-source models (Llama, Mistral) for high-volume, lower-stakes tasks. Fine-tune for your domain's terminology.

Platform: Hybrid cloud, with sensitive data kept on-prem. Add a governance layer (Zenity for Bedrock, or in-house policy engines).

Cost: $5-15K/month

Governance: Access controls and baseline compliance.
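Mixing closed-source and open-source models usually comes down to a routing rule. This is a deliberately simple sketch: the model identifiers are placeholders rather than real endpoint names, and the two boolean flags are assumptions about how tasks get labeled upstream.

```python
from dataclasses import dataclass

# Hypothetical model identifiers; substitute the ones your providers actually expose.
CLOSED_SOURCE_MODEL = "closed-source-model"   # e.g. a GPT-4 or Claude deployment
OPEN_SOURCE_MODEL = "self-hosted-open-model"  # e.g. a Llama or Mistral deployment

@dataclass
class Task:
    name: str
    needs_complex_reasoning: bool
    business_critical: bool  # would a wrong answer hurt customers, money, or compliance?

def choose_model(task: Task) -> str:
    """Reserve the closed-source model for hard or high-stakes work;
    send bulk, lower-stakes traffic to the cheaper open-source model."""
    if task.needs_complex_reasoning or task.business_critical:
        return CLOSED_SOURCE_MODEL
    return OPEN_SOURCE_MODEL

tasks = [Task("tag inbound ticket", False, False),
         Task("summarize vendor contract risk", True, True)]
routing = {t.name: choose_model(t) for t in tasks}
print(routing)
```

In practice the labels often come from a cheap classifier or from the workflow definition itself; the point is that the routing decision is explicit and auditable.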

Enterprise Phase Stack (Months 10-18)

Framework: Multi-framework coordination, with LangGraph as the orchestrator and specialized agents built in CrewAI or AutoGen depending on the use case.

LLM: A hybrid of closed-source, open-source, and fine-tuned models. Sensitive workloads (such as those touching internal databases) can run on open-source models inside your VPC.

Platform: Multi-region and hybrid, with a full compliance suite (SOC 2, HIPAA, GDPR, depending on your industry).

Cost: $50K+/month

ROI: 3-5x within 18 months

Framework Decision Matrix:

- Need it working in 2 weeks? – LangChain or AutoGen

- Building multi-agent teams? – CrewAI or AutoGen

- Need fine-grained control over complex, stateful workflows? – LangGraph

- Need enterprise governance from day one? – Managed platforms (Bedrock, Azure AI Foundry)

Integration Strategy – Where 70% Fail

The Integration Challenge

Seventy percent of failed agent deployments fail on integration, not on model selection.

Why? Enterprise systems are a nightmare: inconsistent authentication schemes, unusual data formats, "temporary" APIs built in 2012 that are now load-bearing, and systems with no API at all.

Systematic Integration Approach

Step 1: Integration inventory

List every system your agents will touch. For each one, document:

- API availability and quality (REST, GraphQL, SOAP, or “pray and scrape”)

- Authentication method

- Performance characteristics

- Rate limits and quotas

- Data format and schema

- System owner and access approvers

Then assign each system a priority: critical (the agent can't function without it), important (reduced functionality without it), or nice-to-have (a future enhancement).
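An inventory kept as structured data rather than a spreadsheet can flag schedule risks automatically. The sketch below is one possible shape for it; the field names, the 5,000 ms threshold, and the sample systems are illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

class Priority(Enum):
    CRITICAL = "critical"          # the agent can't function without it
    IMPORTANT = "important"        # reduced functionality without it
    NICE_TO_HAVE = "nice-to-have"  # future enhancement

@dataclass
class SystemEntry:
    name: str
    api_style: str                 # "REST", "GraphQL", "SOAP", or "none" (scrape / RPA)
    auth_method: str               # e.g. "OAuth 2.0", "API key", "service account"
    p95_latency_ms: Optional[int]  # None if nobody has measured it yet
    rate_limit_per_min: Optional[int]
    data_format: str
    owner: str                     # who owns the system and approves access
    priority: Priority

SLOW_MS = 5_000  # illustrative threshold for "slow" responses

def integration_risks(inventory: list[SystemEntry]) -> list[str]:
    """Flag critical systems whose integration is likely to stretch the schedule."""
    risks = []
    for s in inventory:
        if s.priority is not Priority.CRITICAL:
            continue
        if s.api_style == "none":
            risks.append(f"{s.name}: no API, needs an RPA bridge")
        if s.rate_limit_per_min is None:
            risks.append(f"{s.name}: rate limits undocumented")
        if s.p95_latency_ms is None:
            risks.append(f"{s.name}: latency never measured")
        elif s.p95_latency_ms > SLOW_MS:
            risks.append(f"{s.name}: p95 latency {s.p95_latency_ms} ms")
    return risks

inventory = [
    SystemEntry("legacy-erp", "none", "shared service account", 8_000, None,
                "fixed-width text", "finance-it", Priority.CRITICAL),
    SystemEntry("crm", "REST", "OAuth 2.0", 300, 600, "JSON", "sales-ops", Priority.CRITICAL),
    SystemEntry("wiki", "REST", "API key", None, None, "HTML", "it", Priority.NICE_TO_HAVE),
]
for line in integration_risks(inventory):
    print(line)
```

Unknowns (`None`) are treated as risks on purpose: an unmeasured latency or undocumented rate limit on a critical system is exactly what blows up timelines later.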

Step 2: Choose an integration approach

Pre-built connectors (easiest, ~2 weeks): If your framework (e.g., LangChain) already has a connector, use it. Don't rebuild it.

Custom API integration (moderate, 4-6 weeks): For systems that have APIs but no existing connectors. Straightforward but time-consuming.

Event-driven integration (flexible, 6-8 weeks): For asynchronous business processes and cross-system coordination. More complex upfront, but it pays off at scale.

Last resort (RPA bridge, 8-10 weeks): For legacy systems with no API. Use UiPath or a similar tool to bridge the agent to the legacy UI. Brittle, but sometimes necessary.

Step 3: Test integrations ruthlessly

Sandbox testing is necessary but not sufficient. You also need:

- Load testing: Three-quarters of integration failures only surface under load. Test at 2x expected peak volume.

- Edge-case testing: Slow API responses (>5 seconds), API failures, rate limits, malformed responses.

- Failure recovery: What happens when an API call fails mid-workflow? Does the agent retry? Escalate? Corrupt its state?

- Security testing: Authentication failures, token refresh logic, data sanitization.
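A fault-injection harness lets you check failure recovery before real systems misbehave. The sketch below assumes a hypothetical `FlakyAPI` test double with invented failure rates, and it runs requests sequentially with simulated latency, so it checks behavior at 2x volume rather than generating true concurrent load (use a dedicated load-testing tool for that).

```python
import random
import statistics

class FlakyAPI:
    """Hypothetical test double that injects common failure modes.
    Latency is returned rather than slept, so the test runs instantly."""

    def __init__(self, seed: int = 7) -> None:
        self.rng = random.Random(seed)  # seeded so failures are reproducible

    def call(self) -> tuple[str, float]:
        r = self.rng.random()
        if r < 0.03:
            return "error", self.rng.uniform(0.1, 0.3)          # 5xx or dropped connection
        if r < 0.06:
            return "rate_limited", self.rng.uniform(0.05, 0.1)  # HTTP 429
        if r < 0.08:
            return "invalid", self.rng.uniform(0.1, 0.3)        # malformed payload
        if r < 0.12:
            return "ok", self.rng.uniform(5.0, 8.0)             # valid but slower than 5 s
        return "ok", self.rng.uniform(0.05, 0.5)

def agent_step(api: FlakyAPI, order_id: int, ledger: list[int], escalations: list[int]) -> float:
    """Commit only after a valid response; anything else escalates without touching state."""
    outcome, latency = api.call()
    if outcome == "ok":
        ledger.append(order_id)
    else:
        escalations.append(order_id)
    return latency

def run_volume_test(expected_peak: int, multiplier: float = 2.0) -> dict:
    api = FlakyAPI()
    ledger: list[int] = []
    escalations: list[int] = []
    total = int(expected_peak * multiplier)
    latencies = [agent_step(api, i, ledger, escalations) for i in range(total)]
    cuts = statistics.quantiles(latencies, n=100)  # 99 cut points: cuts[k-1] is the k-th percentile
    return {
        "requests": total,
        "committed": len(ledger),
        "escalated": len(escalations),
        "duplicate_commits": len(ledger) - len(set(ledger)),
        "p50_s": cuts[49], "p95_s": cuts[94], "p99_s": cuts[98],
    }

report = run_volume_test(expected_peak=500)
# Invariants: every request is either committed or escalated, and nothing commits twice.
assert report["committed"] + report["escalated"] == report["requests"]
assert report["duplicate_commits"] == 0
print(report)
```

The assertions encode the failure-recovery questions directly: no request is silently lost and none is committed twice.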

Step 4: Monitor integration health

- API success rates (target: >99% for critical integrations)

- Latency monitoring at P50, P95, and P99

- Failure alerts, plus automatic retries with exponential backoff

- Graceful degradation (the agent falls back to co-pilot mode when a critical API fails)
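Retries with exponential backoff and a fallback to co-pilot mode fit together naturally. This is a minimal sketch, assuming a hypothetical `TransientError` that your API client raises for retryable failures; the delays and attempt count are illustrative defaults, and the jitter follows the common "full jitter" pattern.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, HTTP 429, 5xx)."""

def call_with_backoff(fn: Callable[[], T], max_attempts: int = 4, base_delay: float = 0.5,
                      max_delay: float = 8.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying transient failures with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of retries: let the caller decide how to degrade
            ceiling = min(max_delay, base_delay * 2 ** attempt)  # 0.5, 1, 2, 4, ... seconds
            sleep(random.uniform(0, ceiling))  # jitter keeps many clients from retrying in lockstep
    raise AssertionError("unreachable")

def handle_request(fetch_account: Callable[[], dict], alert: Callable[[str], None],
                   sleep: Callable[[float], None] = time.sleep) -> dict:
    """Run autonomously if the critical API answers; otherwise alert and fall back to co-pilot mode."""
    try:
        account = call_with_backoff(fetch_account, sleep=sleep)
        return {"mode": "autonomous", "account": account}
    except TransientError as exc:
        alert(f"critical API failed after retries: {exc}")
        # Co-pilot mode: the agent prepares a draft and a human reviews and executes it.
        return {"mode": "copilot", "account": None}

# Usage with fake dependencies; sleep is stubbed so the example runs instantly.
alerts: list[str] = []
attempts: list[int] = []

def accounts_api_down() -> dict:
    attempts.append(1)
    raise TransientError("503 from accounts API")

degraded = handle_request(accounts_api_down, alerts.append, sleep=lambda s: None)
print(degraded["mode"], len(attempts), alerts)
```

Injecting `sleep` and `alert` as parameters keeps the policy testable without real waits or a real paging system.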

Team & Skills Required – Who You Actually Need

Core Roles (Non-Negotiable)

AI Engineer: LLM expertise, prompt engineering, and a solid grasp of model behavior and limitations. Must be able to debug why an agent made a particular decision.

Integration Engineer: Backend development, API design, data pipeline construction. This is your most critical hire; they unlock everything else.

Operations Engineer: Monitoring and reliability engineering for non-deterministic systems. Familiarity with observability tools (Datadog, New Relic, or in-house telemetry).

Security/Compliance Manager: Governance, audit trails, risk assessment. This can't be a part-time role or an afterthought.

Product Manager: Prioritization, requirements, stakeholder management, and ruthless scope control.

Optional But Valuable

Data Scientist: Model optimization and fine-tuning, evaluation framework design. Needed at scale, not in the pilot.

Change Manager: Adoption programs, user training, organizational change. Critical from phase two onward.

Business Analyst: Deep domain knowledge, process mapping, requirements analysis.

Training and Development

Don't count on hiring enterprise agentic AI specialists; they barely exist. Instead, plan for:

- Framework training (weeks 1-2): Official courses and deep dives into the documentation.

- Best practices and patterns (weeks 3-4): Case studies of successful deployments, governance frameworks.

- On-the-job learning (ongoing): Pair programming, code reviews, post-mortems.

- Community engagement: The LangChain community, AI engineering Slack groups, vendor office hours.

Budget 20 percent of engineering time in months 1-3 for learning and experimentation.

Common Mistakes and How to Avoid Them

Mistake 1: Starting too big

Right approach: One use case, one department, 10% of traffic. Prove it there first.

Mistake 2: Underestimating integration (70% of failures)

Right approach: Complete the integration inventory and testing before agent development begins.

Mistake 3: Inadequate monitoring

Right approach: Log every agent decision, tool call, and result from day one. You can't improve what you can't measure.
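One low-effort way to capture every decision, tool call, and result is structured logging in JSON Lines format, one record per event. The sketch below is a hypothetical `AgentTracer` built only on the standard library; the tool name `erp.post_invoice` and the field names are invented for illustration.

```python
import io
import json
import time
import uuid
from typing import Any, TextIO

class AgentTracer:
    """Writes one JSON object per line (JSON Lines) for each decision, tool call, and result."""

    def __init__(self, sink: TextIO, agent_id: str) -> None:
        self.sink = sink
        self.agent_id = agent_id

    def log(self, run_id: str, event: str, **fields: Any) -> None:
        record = {"ts": time.time(), "agent_id": self.agent_id,
                  "run_id": run_id, "event": event, **fields}
        # default=str keeps logging from crashing on values json can't serialize natively
        self.sink.write(json.dumps(record, default=str) + "\n")

# In production the sink would be a file or log shipper; StringIO keeps the example self-contained.
buf = io.StringIO()
tracer = AgentTracer(buf, agent_id="invoice-agent")
run_id = str(uuid.uuid4())  # ties every event from one task together
tracer.log(run_id, "decision", action="approve", rationale="amount matches purchase order")
tracer.log(run_id, "tool_call", tool="erp.post_invoice", args={"invoice_id": "INV-42"})
tracer.log(run_id, "result", status="success", latency_ms=412)

records = [json.loads(line) for line in buf.getvalue().splitlines()]
print([r["event"] for r in records])  # ['decision', 'tool_call', 'result']
```

A shared `run_id` is what turns a pile of log lines into a traceable story of why the agent did what it did.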

Mistake 4: Ignoring governance

Right approach: Even pilots need an agent registry, permission models, and audit logs.

Mistake 5: Neglecting change management

Right approach: Train users, be transparent about the AI's role, and build in feedback loops.

Mistake 6: Unrealistic expectations

Right approach: An 80 percent task completion rate in the first month is a win. Reaching 95 percent or higher takes 6-12 months of iteration.

Mistake 7: Over-engineering

Right approach: MVP first. Ship something imperfect that works, then iterate based on real usage.

Conclusion – Your Next Steps

Implementing agentic AI at enterprise scale is not a 6-week sprint; it's a 12-18 month journey. The organizations that succeed treat it as building a capability in stages, not as a science experiment.

Start your pre-deployment assessment this week. Identify three quick-win use cases. Assemble a core team of five. Choose a framework (LangChain is the most popular, but others work too). Set a 12-week pilot timeline.

The 60 percent who succeed aren't necessarily smarter or better funded. They're more disciplined: they start small, test thoroughly, and scale methodically based on evidence rather than excitement.

Your competitors already have agents in production. The question isn't whether to start, but whether you'll land in the successful 60 percent or the failing 40 percent.

I'm a software engineer and tech writer with a passion for digital marketing. Combining technical expertise with marketing insights, I write engaging content on topics like technology, AI, and digital strategy, drawing on hands-on experience in both coding and marketing.