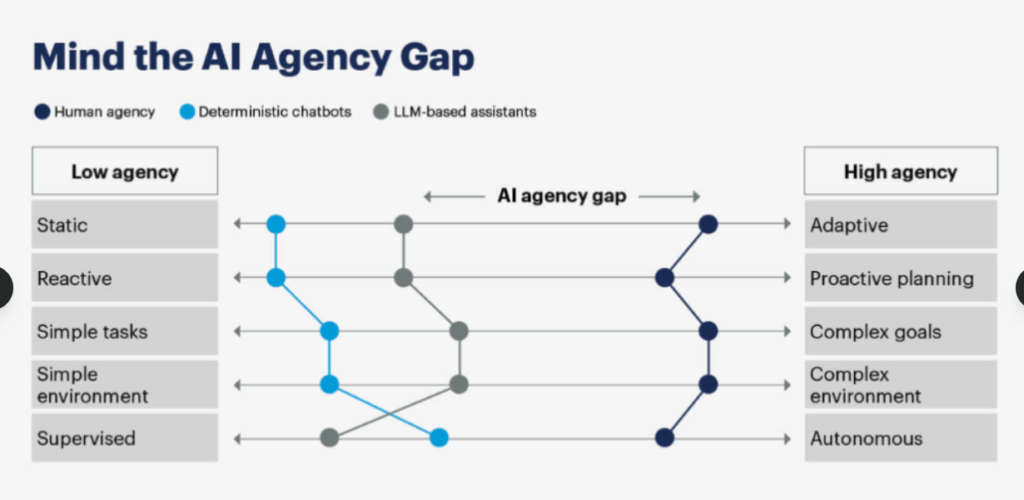

The conversation about artificial intelligence has taken a radically different turn. It is no longer about chatbots that answer frequently asked questions or algorithms that suggest products. AI agents for business are different in kind: they are autonomous systems that plan, make decisions, and execute intricate workflows without constant human supervision.

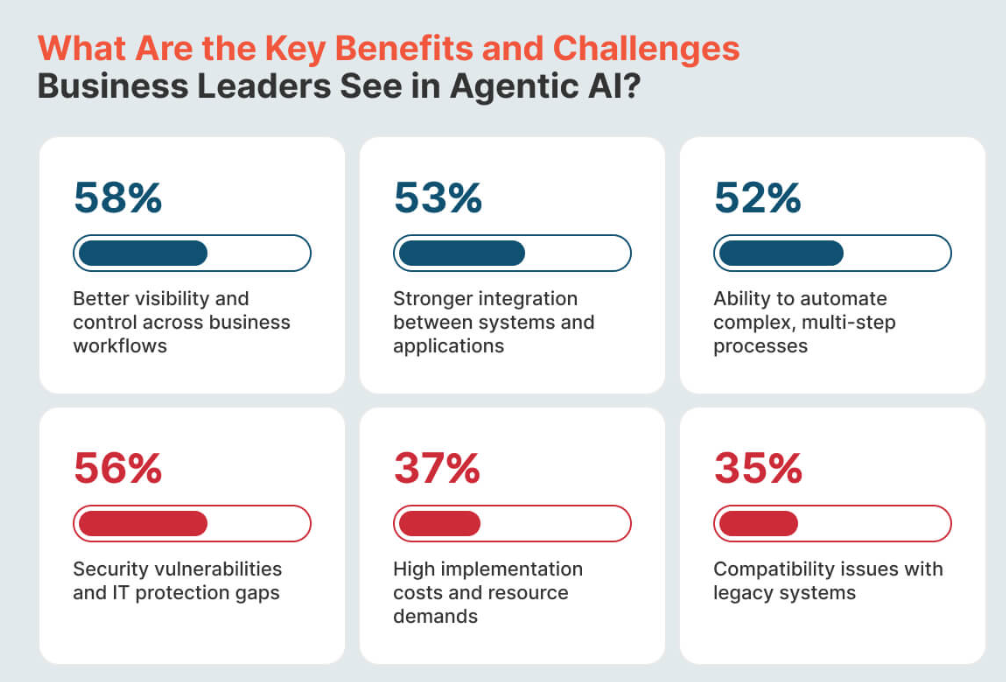

In 2024, the global market for AI agents reached $5.4 billion, and it is projected to grow to $47.1 billion by 2030 at a compound annual growth rate of 44.8%. That’s not speculative hype. Companies are reporting productivity gains of 40-60%, cost savings of 25-40%, and revenue improvements of 20-30%. However, according to Gartner, more than 40 percent of agentic AI projects will fail by 2027 because of integration complexity and poor ROI.

This article dissects what is actually working, what is still experimental, and how businesses can navigate this change without falling into the usual traps.

What Makes AI Agents Different from Traditional Automation

Conventional automation is scripted: when X occurs, do Y. Chatbots match keywords to canned replies. Robotic process automation clicks through predetermined sequences. Such systems break when something unexpected occurs.

AI agents operate differently. They are built from four architectural elements: large language models as reasoning engines, memory systems that preserve context across interactions, external tools and APIs for taking real-world actions, and orchestration layers that manage multi-step workflows.

When a customer service agent faces a refund request that falls outside normal parameters, it does not fail or escalate by default. It reasons about the situation, reviews the customer’s history, checks business policies, and makes a decision, or escalates with full context when human judgment is truly required.
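The four elements can be pictured as a small loop. The following is a minimal sketch, not a production design: `scripted_reasoner` is a hypothetical rule-based stand-in for the language model, `lookup_order` is an invented tool, and the order ID is illustrative.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    # Reasoning engine: a stand-in for an LLM call (assumption, not a real API).
    reason: Callable[[str, list[str]], tuple[str, str]]
    # External tools the agent may invoke, keyed by name.
    tools: dict[str, Callable[[str], str]]
    # Memory: a running record of what the agent has done so far.
    memory: list[str] = field(default_factory=list)

    def run(self, goal: str, max_steps: int = 5) -> str:
        """Orchestration layer: reason, act, remember, repeat."""
        for _ in range(max_steps):
            action, arg = self.reason(goal, self.memory)
            if action == "finish":
                return arg
            result = self.tools[action](arg)
            self.memory.append(f"{action}({arg}) -> {result}")
        return "escalate: step budget exhausted"


def scripted_reasoner(goal: str, memory: list[str]) -> tuple[str, str]:
    # Hypothetical logic: look the order up once, then finish.
    if not memory:
        return ("lookup_order", "A-100")
    return ("finish", f"Resolved using: {memory[-1]}")


def lookup_order(order_id: str) -> str:
    # Hypothetical tool; a real one would call an order system.
    return f"order {order_id} shipped"


agent = Agent(reason=scripted_reasoner, tools={"lookup_order": lookup_order})
answer = agent.run("Where is order A-100?")
print(answer)
```

The step budget in `run` is one simple way to stop an agent that never reaches a conclusion and hand the case to a person instead.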

The shift is from reactive systems that respond once problems occur to proactive systems that anticipate problems and prevent them. A conventional IT monitoring system detects that a server has gone down and sends a notification. An agentic system spots early warning signs of performance degradation, automatically redistributes workloads, and corrects the situation before users notice anything is wrong.

Current State – What’s Actually Working Right Now

Customer Service Reaching True Autonomy

Intercom’s AI agent Fin has handled more than 13 million customer inquiries across 4,000 customers. These are not just simple “Where’s my order?” queries. The system autonomously accesses account data, processes returns, updates subscriptions, and resolves issues end to end. Organizations report first-contact resolution rates of 50-70 percent with 24/7 availability.

When evaluating several customer-facing agents, I found the difference between simple chatbots and actual agent systems immediately noticeable. Agents can switch context mid-conversation, recall past interactions, and do not force users through a decision tree.

Supply Chain Systems Making Real-Time Decisions

A global electronics manufacturer deployed agentic AI to monitor 200+ suppliers across 15 countries. When semiconductor supplies became scarce in Southeast Asia, the system identified alternative suppliers, began negotiating emergency contracts, and rerouted deliveries before human managers even learned about the problem. Outcomes: a 30 percent decrease in inventory carrying costs and a 25 percent improvement in on-time delivery.

This isn’t theoretical. Supply chain agents are running around the clock in production settings, adapting to market conditions, weather shocks, and geopolitical events in real time.

Financial Services Preventing Fraud Proactively

Banks have deployed agents to continuously monitor network endpoints and user behavior patterns. When anomalous data access patterns indicate an insider threat, the systems revoke user privileges, quarantine affected systems, and gather forensic evidence, stopping breaches before human security staff have even been alerted.

Conventional fraud systems alert on suspicion. Agentic systems contain and manage threats.

HR Operations Running End-to-End

One technology company automated the entire employee lifecycle. The system sources candidates across platforms, runs initial screening, schedules interviews based on availability, personalizes onboarding, and proactively identifies flight-risk employees so that retention efforts can start early. The result: 50 percent less time to hire, 35 percent better retention, and 30 percent lower HR operating expenses.

The Next Frontier – What’s Emerging in 2026

Extended Reasoning Changing Capability Boundaries

The latest advances in foundation models, such as Claude 3.7 Sonnet and OpenAI o3-mini, add extended step-by-step reasoning. These models allocate more compute time to difficult problems, so agents can test alternative solutions, refine strategies iteratively, and solve multi-step problems that previously required humans to decompose them.

This stronger reasoning lets agents move from rigid automation to adaptive problem solving, where execution strategies change dynamically based on circumstances and real-time feedback.

Multi-Agent Systems Replacing Monolithic Architecture

The next architectural paradigm is the move from single autonomous agents to multi-agent systems. Instead of one monolithic agent running the entire workflow, specialized agents cooperate: one specializes in data retrieval, another in analysis, another in execution, and another in verification.

In research workflows, this might mean agents that take on literature review, hypothesis generation, experimental design, and result validation roles and coordinate those activities. Distributing work this way allows more effective automation of complicated problem spaces.
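The division of labor between retrieval, analysis, execution, and verification agents can be sketched as a chain of functions that pass shared state along. This is only an illustration under simple assumptions: each "agent" is a plain Python function, the records are made-up numbers, and the outlier rule (more than twice the mean) is arbitrary.

```python
from typing import Callable

# Each specialized agent takes the shared state and returns an updated copy.
AgentFn = Callable[[dict], dict]


def retrieval_agent(state: dict) -> dict:
    # Hypothetical data source; a real agent would query a database or API.
    return {**state, "records": [12, 15, 11, 40]}


def analysis_agent(state: dict) -> dict:
    records = state["records"]
    mean = sum(records) / len(records)
    # Arbitrary illustrative rule: anything above twice the mean is an outlier.
    return {**state, "outliers": [r for r in records if r > 2 * mean]}


def execution_agent(state: dict) -> dict:
    return {**state, "actions": [f"flag record {r}" for r in state["outliers"]]}


def verification_agent(state: dict) -> dict:
    # Confirms that every outlier produced exactly one action.
    ok = len(state["actions"]) == len(state["outliers"])
    return {**state, "verified": ok}


def run_pipeline(agents: list[AgentFn], state: dict) -> dict:
    for agent in agents:
        state = agent(state)
    return state


result = run_pipeline(
    [retrieval_agent, analysis_agent, execution_agent, verification_agent], {}
)
print(result["actions"], result["verified"])
```

Keeping verification as its own stage means a failure there can halt the pipeline without the other agents needing to know how checks are done.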

Reducing Hallucination Through Better Grounding

Though hallucination remains a problem, architectures are becoming more accurate thanks to improved grounding mechanisms. Retrieval-Augmented Generation (RAG) systems that combine real-time data feeds, fact-checking tools, and confidence scores are reducing the generation of false information.

I have tested many agent frameworks, and RAG-based systems consistently performed better than purely generative ones whenever factual accuracy mattered. Newer evaluation frameworks also let agents check their own outputs for inconsistencies before submission.

For organizations concerned about the quality and accuracy of AI-generated content, resources such as Best AI Humanizer Tools Compared 2025 may offer more insight into how companies are working to control the quality of their AI outputs.
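To make the grounding idea concrete, here is a toy sketch of retrieval with a confidence threshold. It is deliberately simplified: word overlap stands in for a real embedding search, the two documents are invented, and the 0.3 threshold is an arbitrary assumption. The point is only the pattern of answering from a retrieved source and declining when nothing relevant is found.

```python
# Hypothetical knowledge base; a real system would use a document store.
DOCS = [
    "Refunds are accepted within 30 days of purchase.",
    "Standard shipping takes 5 business days.",
]


def tokenize(text: str) -> set[str]:
    cleaned = text.lower().replace("?", "").replace(".", "")
    return set(cleaned.split())


def retrieve(question: str) -> tuple[float, str]:
    """Return (confidence, passage) for the best-matching document.

    Confidence is the share of question words found in the passage,
    a crude stand-in for a real relevance score.
    """
    q = tokenize(question)
    scored = [(len(q & tokenize(d)) / max(len(q), 1), d) for d in DOCS]
    return max(scored)


def grounded_answer(question: str, min_confidence: float = 0.3) -> str:
    confidence, passage = retrieve(question)
    if confidence < min_confidence:
        # Decline rather than generate an unsupported answer.
        return "I don't have a reliable source for that."
    return f"According to our records: {passage}"


print(grounded_answer("Are refunds accepted within 30 days?"))
print(grounded_answer("Who founded the company?"))
```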

Real Business Impact – What the Numbers Show

Organizations using AI agents are tracking ROI on several levels:

Operational Metrics:

- Productivity gains of 40-60% in automated functions.

- 25-40% savings in operational costs.

- 30-50% reduction in process completion times.

- 50-70% first-contact resolution rates in customer service.

Strategic Metrics:

- Revenue increases of 20-30% from improved responsiveness.

- Employee retention improved by 35 percent through proactive HR intervention.

- Predictive supply chain management reduced inventory carrying costs by 30 percent.

Risk Metrics:

- Breach prevention: threats are stopped in real time, before they cause damage.

- Continuous compliance monitoring to minimize regulatory violations.

- Proactive system maintenance that cuts unplanned downtime by 25 percent.

According to a BCG study, ROI increases over time as agents learn and improve, unlike static software whose benefits remain constant. A mature deployment can pay off in 12-18 months.

Critical Implementation Challenges

Integration Complexity Remains the Biggest Barrier

Most organizations run fragmented technology stacks. CRMs do not talk to ERP systems, financial platforms keep their data separate from HR systems, and analytics solutions run in silos. Agentic systems have to orchestrate across these disconnected environments, which requires custom-built APIs, data mapping, and real-time synchronization.

About one in five companies carries architectural debt or workflow inefficiencies that prevent smooth AI implementation. This is not a minor technical challenge; it is the main reason pilot projects fail when they try to scale.

Data Quality Determines Success

High-quality, domain-specific data is needed to build robust agents. Many regulated sectors face data scarcity because compliance rules restrict the use of confidential information. What is more, 80 percent of enterprise data is unstructured (emails, documents, images, logs), and agents struggle to extract reliable training data from such heterogeneous sources.

Outdated or incomplete datasets are likely to produce inaccurate results. The core issue: “garbage in, garbage out” is more dangerous for autonomous systems that make decisions without human involvement.

Multi-Step Accuracy Degradation

Even if each individual AI-driven prediction is 95 percent accurate, overall accuracy falls to roughly 60 percent over a sequence of 10 dependent decisions (0.95^10 ≈ 0.60), which means a cumulative error rate of about 40 percent. Agents that make independent decisions at every step compound this uncertainty.

I have run a number of agent systems on complex workflows and found that, without good validation layers and human-in-the-loop checkpoints for high-stakes decisions, their accuracy cannot be relied on for important business processes.
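The compounding effect can be checked with a few lines of arithmetic. This sketch assumes every step succeeds independently with the same probability, which is a simplification; the 80 percent target in the usage example is an arbitrary illustration.

```python
import math


def chain_accuracy(per_step: float, steps: int) -> float:
    """Probability that every step in a chain is correct,
    assuming independent steps with equal accuracy."""
    return per_step ** steps


def max_steps_for_target(per_step: float, target: float) -> int:
    """Longest chain whose end-to-end accuracy stays at or above target."""
    return math.floor(math.log(target) / math.log(per_step))


end_to_end = chain_accuracy(0.95, 10)
print(f"10 steps at 95% each: {end_to_end:.1%} end-to-end accuracy")
print(f"Cumulative error: {1 - end_to_end:.1%}")
print("Steps before accuracy drops below 80%:", max_steps_for_target(0.95, 0.80))
```

Under these assumptions, ten steps at 95 percent each leave roughly a 60 percent chance that the whole chain is correct, which is why validation checkpoints placed every few steps matter.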

Governance and Liability Remain Unclear

Legally, it is organizations, not algorithms, that are liable for the decisions and actions of AI systems. Accountability becomes uncertain when autonomous agents make decisions that affect customers, employees, or operations. Which executive is responsible when an agent approves a loan on the basis of biased data? Who is liable when an agent’s actions disrupt the supply chain?

The lack of established governance structures creates organizational risk. Agent-to-application connections are often not visible centrally. Unmanaged APIs are vulnerable to cyberattacks, and because autonomous agents communicate across systems, security lapses can spread quickly without human intervention.

Cost Barriers for Mid-Market Organizations

Production-grade agents have large infrastructure requirements, including high-performance compute for model training, scalable real-time inference, dedicated talent for prompt engineering and machine learning operations, and ongoing maintenance expenses. Businesses report infrastructure costs of more than $500K per year for mid-sized agent deployments.

What looks like a simple feature request might require significant architectural redesign and a large cost increase. The shortage of ML engineers, prompt engineers, and AI governance specialists has pushed compensation 30-40% above industry rates.

Strategic Implementation Framework

Phase 1-Discovery and Opportunity Mapping

Implementing agentic AI starts with thorough organizational analysis. Identify high-value automation opportunities by examining each business function for operational bottlenecks, decision points, and manual workflows.

Rank opportunities on two dimensions: impact (how much value can be created or cost saved) and feasibility (technical and organizational complexity). Quick wins, meaning high-impact, low-complexity opportunities, build organizational trust and fund more complex projects.

A typical priority roadmap starts with customer support automation (defined use case, obvious ROI), moves on to supply chain optimization (higher complexity, longer payoff), and ends with autonomous decision-making (most complex, highest risk).
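The two-dimension ranking described in this phase can be turned into a simple scoring exercise. The sketch below is illustrative only: the 1-to-5 scales, the product-based score, the quick-win threshold, and the scores assigned to each opportunity are all assumptions, not figures from any study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Opportunity:
    name: str
    impact: int       # assumed scale: 1 (low value) to 5 (high value)
    feasibility: int  # assumed scale: 1 (very complex) to 5 (easy)


def rank(opportunities: list[Opportunity]) -> list[Opportunity]:
    # Score by impact x feasibility; break ties in favor of easier projects.
    return sorted(
        opportunities,
        key=lambda o: (o.impact * o.feasibility, o.feasibility),
        reverse=True,
    )


def is_quick_win(o: Opportunity, threshold: int = 4) -> bool:
    return o.impact >= threshold and o.feasibility >= threshold


# Hypothetical scores for the three roadmap items.
backlog = [
    Opportunity("Autonomous decision-making", impact=5, feasibility=1),
    Opportunity("Customer support automation", impact=4, feasibility=5),
    Opportunity("Supply chain optimization", impact=5, feasibility=3),
]

ranked = rank(backlog)
for o in ranked:
    label = " (quick win)" if is_quick_win(o) else ""
    print(f"{o.impact * o.feasibility:>2}  {o.name}{label}")
```

With these example scores, the ordering matches the roadmap: customer support first, supply chain second, autonomous decision-making last.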

Phase 2-Technology Selection and Proof of Concept

Most organizations begin with narrowly focused use cases that have low agent autonomy and high human supervision. A customer service agent handling refund requests works within set parameters: it can approve only specific types of refund, applies business rules in every case, and escalates novel cases.

Evaluate frameworks against your specific requirements. For general-purpose agent development, LangChain provides modularity and ecosystem flexibility. For production-grade multi-agent systems, CrewAI offers role-based coordination. For research and experimentation, AutoGen allows flexible collaboration between agents.

Choose language models based on cost, accuracy, latency, and privacy. OpenAI’s GPT models are the most general-purpose option, are cloud-hosted, and are priced per inference. Open-source models such as Llama provide privacy and control but require infrastructure investment. Anthropic’s Claude offers more sophisticated reasoning but costs more per inference.

For organizations looking at the wider landscape of AI tools beyond agents, The Complete Guide to Generative AI Tools for Business in 2026 offers a broad overview of complementary technologies that can work alongside agentic systems.
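A bounded refund agent of this kind can be expressed as a handful of explicit rules wrapped around whatever model does the reasoning. The sketch below is an assumption-laden illustration: the dollar limit, the 30-day window, and the list of allowed reasons are invented values, not recommendations.

```python
from dataclasses import dataclass

# Hypothetical business rules for the proof of concept.
AUTO_APPROVE_LIMIT = 100.00
REFUND_WINDOW_DAYS = 30
ALLOWED_REASONS = {"damaged_item", "late_delivery"}


@dataclass
class RefundRequest:
    amount: float
    reason: str
    days_since_purchase: int


def decide(req: RefundRequest) -> str:
    """Return 'approve', 'deny', or 'escalate' for a refund request."""
    if req.reason not in ALLOWED_REASONS:
        # Unfamiliar case types go to a human rather than being guessed at.
        return "escalate"
    if req.days_since_purchase > REFUND_WINDOW_DAYS:
        return "deny"
    if req.amount > AUTO_APPROVE_LIMIT:
        # Within policy, but above the agent's spending authority.
        return "escalate"
    return "approve"


print(decide(RefundRequest(40.0, "damaged_item", 5)))
print(decide(RefundRequest(400.0, "damaged_item", 5)))
print(decide(RefundRequest(40.0, "changed_mind", 5)))
```

Starting with tight limits like these and loosening them as the agent proves reliable is one way to keep human supervision high during a pilot.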

Phase 3-Integration and Data Foundation

Effective implementation of agents needs three basic components:

Data Preparation:

- Audit current data sources for quality, consistency, and completeness.

- Implement data governance that guarantees agents access to relevant data.

- Establish feedback loops on agent decisions to drive continuous improvement.

- Add validation checks that govern agent access to sensitive data.

API and System Integration:

- Identify gaps in current APIs and build bridges across them.

- Implement authentication and authorization so that agents can access only authorized resources.

- Design rollback mechanisms that allow prompt intervention when agent behavior deviates.

- Create audit trails of all agent activities for compliance and debugging.
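Two of the integration items above, scoped authorization and audit trails, fit naturally into a single wrapper around every tool call. This is a minimal sketch under stated assumptions: the agent ID, the scope names, and the in-memory log are hypothetical, and a real deployment would use an identity provider and durable log storage.

```python
import json
from datetime import datetime, timezone
from typing import Callable

# Hypothetical permission table: which scopes each agent may use.
PERMISSIONS = {"support_agent": {"crm:read", "refund:create"}}

# In-memory audit trail; stands in for durable, append-only storage.
audit_log: list[str] = []


def call_tool(agent_id: str, scope: str, action: Callable[[], str]) -> str:
    """Run a tool call only if the agent holds the scope; log every attempt."""
    allowed = scope in PERMISSIONS.get(agent_id, set())
    audit_log.append(json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "agent": agent_id,
        "scope": scope,
        "allowed": allowed,
    }))
    if not allowed:
        raise PermissionError(f"{agent_id} lacks scope {scope}")
    return action()
```

For example, `call_tool("support_agent", "crm:read", lambda: "customer record")` returns the tool result, while a call with an unlisted scope such as `"erp:write"` raises `PermissionError`. Both attempts are recorded, so denied calls are visible during debugging and compliance reviews.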

Monitoring and Observability:

- Define success metrics beyond task completion (accuracy, efficiency, alignment with values).

- Set up live dashboards to monitor agent performance, error rates, and customer satisfaction.

- Build alerting mechanisms that flag abnormal behavior or degraded accuracy.

- Set up human review checkpoints for high-impact decisions.
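An alert for degraded accuracy can be as simple as a rolling window over recent outcomes. The sketch below assumes that each agent decision can eventually be labeled correct or incorrect; the window size and threshold are arbitrary example values.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent decisions and flags degradation."""

    def __init__(self, window: int = 50, threshold: float = 0.9):
        self.outcomes: deque[bool] = deque(maxlen=window)
        self.threshold = threshold

    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes)

    def record(self, correct: bool) -> bool:
        """Record one outcome; return True if an alert should fire.

        No alert is raised until the window is full, to avoid noise
        from the first few decisions.
        """
        self.outcomes.append(correct)
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.threshold


monitor = AccuracyMonitor(window=10, threshold=0.9)
alerts = [monitor.record(True) for _ in range(10)]
alerts.append(monitor.record(False))  # 9 of 10 correct: still at threshold
alerts.append(monitor.record(False))  # 8 of 10 correct: below threshold
print(alerts[-2], alerts[-1])
```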

Phase 4-Governance and Continuous Improvement

Before agents operate at scale, define a governance framework that specifies:

Decision Rights: What can autonomous agents decide? What must be approved by humans? What are the spending limits, access restrictions, and action limits?

Accountability Structure: Who is responsible for agent performance, accuracy, and actions? Designate specific “Agent Owners” accountable for each autonomous system.

Oversight Mechanisms: Human-in-the-loop review for high-stakes decisions, regular performance audits, and feedback loops that drive agent improvement.

Ethical Principles: Define permissible uses, prohibit biased actions, promote transparency in agent decisions, and require explainability.
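Decision rights and accountability are easier to enforce when they live in one explicit policy table rather than being scattered through agent code. The sketch below is hypothetical throughout: the action names, owners, and spending limit are invented, and unknown actions are forbidden by default as a design assumption.

```python
from enum import Enum


class Mode(Enum):
    AUTONOMOUS = "autonomous"
    NEEDS_APPROVAL = "needs_approval"
    FORBIDDEN = "forbidden"


# Hypothetical policy: what each action allows and who owns it.
POLICY = {
    "send_notification": {"mode": Mode.AUTONOMOUS, "owner": "support-lead"},
    "issue_payment": {
        "mode": Mode.AUTONOMOUS,
        "spend_limit": 500,
        "owner": "finance-lead",
    },
    "change_contract": {"mode": Mode.NEEDS_APPROVAL, "owner": "legal-lead"},
}


def resolve(action: str, amount: float = 0.0) -> Mode:
    """Decide how an agent may proceed with a requested action."""
    rule = POLICY.get(action)
    if rule is None:
        # Anything not listed is denied by default.
        return Mode.FORBIDDEN
    if amount > rule.get("spend_limit", float("inf")):
        # Over the spending limit: route to a human approver.
        return Mode.NEEDS_APPROVAL
    return rule["mode"]


print(resolve("issue_payment", 120))
print(resolve("issue_payment", 5000))
print(resolve("delete_database"))
```

The `owner` field is the hook for the Agent Owner role: when an action needs approval or causes a problem, the table says who is accountable.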

Organizations that deploy AI agents at scale will need to assign someone design authority over agent processes, impose control structures, and build human-in-the-loop fallbacks. This demands structural and cultural change, such as redefining the role of IT to include agent management capabilities.

Proven Use Cases Across Industries

Customer Support Automation: Retail, technology, and telecommunications companies reach 50-70% first-contact resolution and reduce support workload by 60%. Medium complexity.

Supply Chain Optimization: Manufacturing and logistics companies see a 30 percent cost reduction and a 25 percent improvement in delivery. High complexity.

Lead Qualification and Scoring: B2B SaaS and finance companies report a 45 percent improvement in conversion and a 30 percent shorter sales cycle. Medium complexity.

Loan Portfolio Management: Banking and insurance firms automate risk evaluation and compliance. High complexity.

HR Operations: Enterprise services cut time-to-hire by half and improve proactive retention. Medium complexity.

Fraud and Threat Detection: Financial services and payment processors get real-time anomaly detection and preventive action. High complexity.

Contract Analysis: With clause extraction, legal and enterprise organizations review documents 80 times faster. Medium complexity.

Predictive Maintenance: Manufacturing and infrastructure operators see a 25 percent decrease in unplanned downtime. High complexity.

Demand Forecasting: Retail and supply chain companies optimize dynamic pricing and inventory. Medium complexity.

Compliance Monitoring: Regulated industries automate monitoring and regulatory reporting. Medium complexity.

Free Learning Resources for Teams

For organizations building internal expertise, there are a number of quality free resources:

Google’s 5-Day AI Agents Intensive on Kaggle covers agent fundamentals, tool integration, multi-agent systems, and deployment. It is taught by Google’s ML researchers and is arguably the most complete crash course.

Microsoft’s AI Agents GitHub repository for beginners offers a substantial 12-lesson course that takes learners from the basic concepts through to building production-scale systems. Every lesson includes video materials, Python code examples, and further learning resources.

Multi AI Agent Systems with crewAI from DeepLearning.AI offers a masterclass on orchestration and agent coordination, taught by CrewAI creator Joao Moura. It is ideal for learning how multiple agents collaborate in complex workflows.

For the open-source AI community, the Hugging Face AI Agents Course combines theoretical grounding with practical examples of working with pre-trained models.

The LangChain Agent Cookbook and LlamaIndex Agent Cookbook offer recipes that developers can use to implement common agent patterns, tool integration, and workflow orchestration, along with real-life examples of building retrieval-augmented generation agents.

Frequently Asked Questions About AI Agents

What is the difference between AI agents and traditional chatbots?

Chatbots react to specific questions and answer them with pattern matching or scripts. AI agents plan multi-step workflows, make autonomous decisions guided by reasoning, maintain contextual memory across interactions, take actions through external tools, and adjust their strategies based on real-time feedback. Agents keep working behind the scenes on complex automated tasks; chatbots wait for user input before they respond.

How long does it take to build an AI agent?

A proof of concept for a bounded use case normally takes 4-8 weeks with an experienced team. Production-grade agents, which have to be thoroughly integrated, governed, and tested, take 3-6 months. Data readiness, the complexity of legacy system integration, and organizational change management are the critical factors in the timeline.

What is the minimum investment?

A pilot project with basic infrastructure and a small team of fewer than 5 people can start at $50K-150K. Enterprise implementations at production scale are estimated to cost $500K-2M a year, depending on infrastructure costs, team size, and scope of operations. Open-source frameworks have low licensing costs but require engineering skills.

Are AI agents fully autonomous?

Contemporary agents operate with varying degrees of autonomy depending on how they are designed. High-stakes decisions (authorizing financial transactions, changing customer contracts, etc.) generally require mandatory human review. Routine decisions (generating reports, scheduling meetings, sending notifications) can be highly autonomous. The most effective designs are human-in-the-loop architectures in which agents handle 80-90% of cases and route exceptions that need judgment to a human.

What are the biggest risks?

Major risks include cascading errors (accuracy loss across multi-step decisions), unforeseen autonomous behavior (agents acting in unintended ways by interpreting goals too broadly), privacy violations (unauthorized access to sensitive information), regulatory non-compliance (agents taking actions that conflict with established industry regulations), and reputational damage (agents making decisions that leave customers dissatisfied).

How do organizations measure ROI?

Conventional ROI frameworks are inadequate. Organizations should track operational metrics (cost per task, processing time, accuracy), strategic metrics (customer satisfaction, market responsiveness), and risk metrics (compliance violations, error rates). ROI typically accelerates over time as agents learn, and mature deployments can deliver returns in 12-18 months.

Can we use open-source models?

Open-source models (Llama, Mistral) are appropriate for most applications, particularly those that need data confidentiality or control over model behavior. They are less expensive, although they need infrastructure and maintenance teams. Proprietary APIs (OpenAI, Anthropic) are simpler to adopt and offer cutting-edge functionality but involve data transmission and per-inference costs. A typical enterprise approach is hybrid: proprietary models for reasoning-intensive processes and open-source models for routine classification and extraction.

How do we avoid bias?

Bias mitigation has to happen at multiple levels: audit training data for representativeness and fairness, build fairness constraints into agent objectives and reward functions, monitor agent decisions for disparate impact across demographic groups, run weekly bias audits with human review, and provide explainability so that agent reasoning is expressed in human language. No single technical fix is sufficient; it requires governance and constant vigilance.
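One of these checks, monitoring for disparate impact, can be illustrated with a simple ratio of approval rates between groups. The sketch below uses invented decision data and the commonly cited four-fifths (0.8) guideline as the flagging threshold; treat both as assumptions, since the right metric and threshold depend on the domain and applicable regulation.

```python
def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: dict[str, int] = {}
    approved: dict[str, int] = {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions: list[tuple[str, bool]]) -> float:
    """Lowest group approval rate divided by the highest.

    Assumes at least one group has a non-zero approval rate.
    """
    rates = approval_rates(decisions)
    return min(rates.values()) / max(rates.values())


# Hypothetical agent decisions: group A approved 8 of 10, group B 5 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

ratio = disparate_impact_ratio(sample)
print(f"Disparate impact ratio: {ratio:.3f}")
print("Flag for human review:", ratio < 0.8)
```

A ratio below the threshold does not prove bias on its own; it is a signal that the decisions deserve a closer human audit.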

What skills do we need to hire?

Required roles include ML engineers (model development and optimization), prompt engineers (designing effective instructions), AI governance specialists (policy adherence and compliance), data engineers (data pipelines and aggregation), and AI Ops engineers (monitoring and ad hoc maintenance). Data roles are shifting from conventional data wrangling toward wrangling multiple coordinated agents.

What is a realistic timeline for mainstream adoption?

Gartner estimates that by 2026, 40% of enterprise applications will include task-specific AI agents, compared to less than 5 percent in 2025. Nevertheless, 4 out of 10 agentic initiatives will fail by 2027 because of poor ROI and implementation issues. By 2029, 70 percent of businesses will have implemented agents in at least one business area, although the applications will differ significantly.

Strategic Imperatives for 2026

Agentic AI is a real technological shift. Unlike past waves of AI, which aimed to augment work done by humans, this shift’s autonomous coordination of intricate workflows changes how work itself gets done.

The way ahead requires balancing three imperatives:

Speed with Governance: Organizations that build AI governance models early move faster later, avoiding duplicated control work. Those that put off governance see pilot projects stall when they try to scale.

Realistic Ambition: The most effective programs begin with small, high-value applications where ROI is evident and risk is controlled. Success there builds the organizational capability and financial reserves for more ambitious applications.

Technology with Talent: The limiting factor is the availability of technical AI talent. Organizations that invest in developing their workforce and create attractive roles such as agent architects and governance specialists will beat competitors that rely on external advisors.

The 44.8 percent compound annual growth rate expected for the AI agents market through 2030 reflects real business value creation, not speculative hype. Nevertheless, that value can only be captured through sound strategic thinking, honest evaluation of organizational capacity, and governance that matches the level of autonomy.

Organizations that act decisively will capture disproportionate value. Those that move slowly will develop capability gaps that compound over time. The businesses that will lead in 2026 are making these decisions today.

I’m a software engineer and tech writer with a passion for digital marketing. Combining technical expertise with marketing insights and hands-on experience in coding and marketing, I write engaging content on topics like technology, AI, and digital strategies.