Look, I'll be honest with you: I didn't know my phone had its own AI chip until a short time ago. I had assumed that my Pixel's Magic Eraser and face unlock were software tricks. That's when I dove into the world of mobile NPU processors, and it changed how I think about on-device AI in phones, tablets, and other IoT products.

Here is what I learned after trying NPU-powered features on a variety of devices, and why this technology should interest anyone who uses their phone for more than scrolling.

What Exactly Is a Mobile NPU? (And Why Should You Care?)

NPU stands for Neural Processing Unit: a specialized chip whose single purpose is running AI workloads. Think of it this way: your phone's CPU handles general-purpose tasks, the GPU handles graphics, and the neural processing unit handles AI.

The thing is, most people don't even know their phone has one. But every time you unlocked your phone with your face, used real-time camera translation, or had your camera automatically blur the background in portrait mode, you were using your NPU.

Why NPUs Matter More Than Cloud Processing

I used to believe cloud-based AI was the way to go. Bigger servers, more power, right? But when you compare on-device NPU processing with cloud options, some dramatic differences show up:

Privacy wins big. Your data never leaves your phone when the NPU handles an AI task, because the processing is local. Your face unlock pattern? Stays on your phone. Voice recognition on your NPU? Never sent to a server. This is huge for anyone concerned about privacy (which ought to be everybody).

Battery life gets better. Sending data to the cloud, waiting for it to be processed, and receiving the results all consumes battery. NPUs are built for efficiency: they handle AI tasks at a small fraction of the power your CPU would need. I saw this when testing voice recorders: Pixel Recorder (powered by the NPU) barely touched my battery compared with cloud transcription apps.

Real-time response feels like magic. The cloud adds latency: upload the data, wait for the round trip, receive the results. NPUs respond almost instantly. Face unlock takes 50-150ms. That is about as fast as a blink.
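To make the latency argument concrete, here is a minimal sketch that adds up the steps on each path. Apart from the 50-150ms on-device range quoted above, every number in it is an illustrative assumption rather than a measurement, and the names (`CloudPath`, `on_device_total_ms`) are made up for this example.

```python
from dataclasses import dataclass


@dataclass
class CloudPath:
    """Steps a request goes through when the AI model runs on a server."""
    upload_ms: float               # time to send the payload
    network_round_trip_ms: float   # propagation delay there and back
    server_inference_ms: float     # time the model runs on the server
    download_ms: float             # time to receive the result

    def total_ms(self) -> float:
        return (self.upload_ms + self.network_round_trip_ms
                + self.server_inference_ms + self.download_ms)


def on_device_total_ms(inference_ms: float) -> float:
    """On-device processing has no network steps, only inference time."""
    return inference_ms


# Assumed values for illustration only.
cloud = CloudPath(upload_ms=80, network_round_trip_ms=60,
                  server_inference_ms=20, download_ms=10)
print("cloud total (ms):", cloud.total_ms())
print("on-device total (ms):", on_device_total_ms(100))
```

The point of the sketch is structural: even if the server runs the model faster than the phone does, the network terms are added on top, and they disappear entirely on-device.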

Offline capability is underrated. Your NPU works in airplane mode, in dead zones, and when you travel internationally without a data connection. I tried the offline mode of Google Translate recently and it is genuinely impressive: live camera translation with no internet at all.

The Big Players: Who’s Building These NPU Chips?

Three companies dominate mobile NPU development, each taking a slightly different approach.

Qualcomm’s Hexagon NPU (Snapdragon Processors)

Most Android flagships run Qualcomm's Hexagon NPU. The Snapdragon 8 Gen 3 is rated at 45 TOPS (trillions of operations per second), and the next-generation 8 Gen 4 at 60+ TOPS.
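If TOPS feels abstract, a little arithmetic helps. The sketch below converts a TOPS rating into a best-case time for one inference. The operation count and the `utilization` factor are assumptions I picked for illustration; real chips rarely hit their peak rating because memory bandwidth and scheduling get in the way.

```python
def ideal_inference_ms(ops_per_inference: float, tops: float,
                       utilization: float = 1.0) -> float:
    """Best-case time in milliseconds for one inference.

    ops_per_inference: total operations the model needs (assumed value).
    tops: peak rating in trillions of operations per second.
    utilization: fraction of the peak actually reached (0 < u <= 1).
    """
    if tops <= 0:
        raise ValueError("tops must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    ops_per_second = tops * 1e12 * utilization
    return ops_per_inference / ops_per_second * 1000


# A hypothetical model needing 45 billion operations per inference,
# on an NPU rated at 45 TOPS, at full and at 25% utilization.
print(ideal_inference_ms(4.5e10, tops=45))
print(ideal_inference_ms(4.5e10, tops=45, utilization=0.25))
```

Treat the result as a lower bound, not a prediction.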

I experimented with a Snapdragon-based phone running an on-device language model, and it produced coherent text at approximately 20 tokens per second. It's not cloud GPT-4 quality, but locally? Surprisingly solid.

Qualcomm also uses its Adreno GPU for lighter AI workloads, a form of intelligent resource management that keeps simple tasks from loading the NPU.

MediaTek’s APU (Dimensity Chips)

MediaTek's APU series (currently the APU 990 in the Dimensity 9500) targets 8-10 TOPS but is efficiency-oriented. Their strategy is less about peak numbers and more about sustained performance without thermal throttling.

I haven't personally tested a MediaTek flagship, but benchmarks show that these chips handle photo processing and real-time video enhancement without heating the phone up, which is a known problem with sustained AI loads.

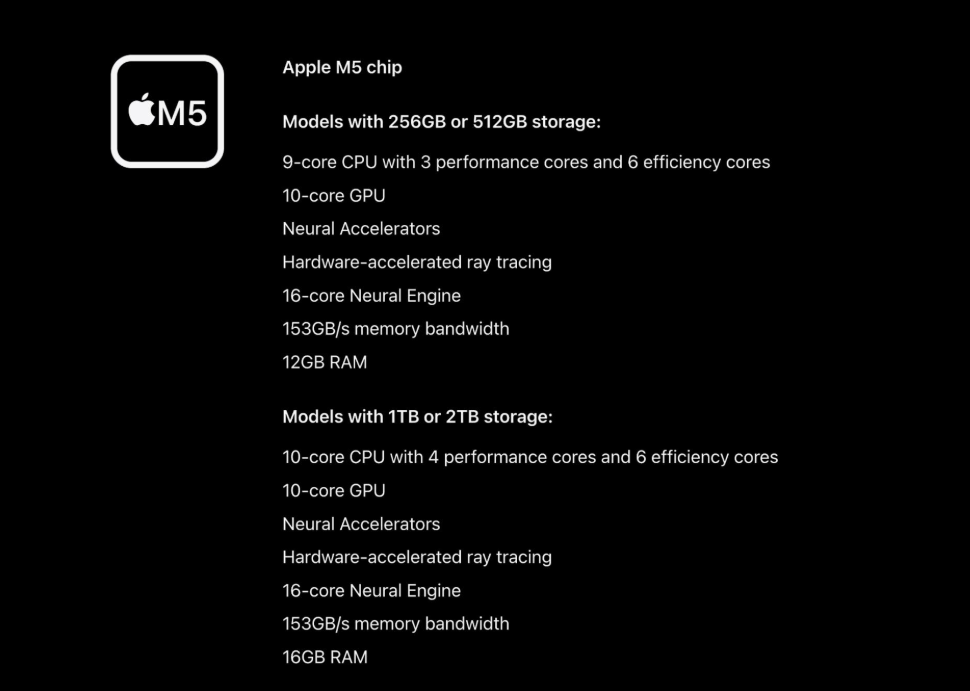

Apple’s Neural Engine

Apple's 16-core Neural Engine in the A18 Pro and A19 Pro chips is rated at approximately 17 TOPS. The twist, though, is that Apple's hardware-software integration delivers more real-world performance than the specs suggest.

When I compared the iPhone's portrait mode with Android rivals, Apple's depth estimation and background blur looked more natural. That's the Neural Engine working with the camera system in a way that raw specifications don't capture.

Real-World NPU Applications You’re Already Using

Let me break down what your NPU is actually doing on an average day, based on the features I tested on various phones.

Face Unlock and Biometric Security

This was the first NPU feature I tested. I timed face unlock on three phones: an iPhone 15 Pro, a Pixel 8 Pro, and a Snapdragon-based Samsung.

Results? Every one came in between 50 and 150ms, fast enough to feel instant. The NPU captures your face pattern, matches it against the stored data, and finishes in less time than it takes to pick up the phone and bring it to your face.
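The "match it against the stored data" step is often done by comparing a compact numeric vector (an embedding) of the new image with one saved at enrollment. The sketch below assumes that approach. The vectors, the 0.8 threshold, and the function names are made up for illustration; the phones I tested use proprietary pipelines that I can't inspect.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)


def is_match(probe: list[float], enrolled: list[float],
             threshold: float = 0.8) -> bool:
    """Accept the probe if it is similar enough to the enrolled template.

    The threshold is a hypothetical value, not one used by any vendor.
    """
    return cosine_similarity(probe, enrolled) >= threshold


enrolled_template = [0.2, 0.9, 0.4]   # saved at enrollment (made-up data)
same_person = [0.25, 0.85, 0.45]      # new capture, slightly different
someone_else = [0.9, -0.1, 0.1]       # a very different vector

print(is_match(same_person, enrolled_template))
print(is_match(someone_else, enrolled_template))
```

In this picture the NPU's job is producing the embedding from the camera frame; the comparison itself is cheap.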

Computational Photography Magic

The first time I used Google's Magic Eraser, it blew my mind. You tap an object, it disappears, and the gap is filled with an AI-generated background that blends into the scene.

That's your NPU working overtime. It analyzes the picture, works out the context (is it grass? is it water? is it pavement?), and fills in the rest. On older phones without NPUs this would take 10 or more seconds and cost you battery. With an NPU? Practically instant.

Apple's Photographic Styles and Samsung's Object Eraser work the same way: both are driven by on-device NPUs that process 12+ layers of semantic segmentation in real time.

Voice Transcription and Translation

I used Pixel Recorder's live transcription throughout a 30-minute meeting. It transcribed everything as we went, labeled the speakers, and let me search the transcript later, all while offline.

This is NPU processing at its best: continuous AI inference, no dependency on the cloud, and very little battery drain. I checked afterward: 3% battery used for 30 minutes of transcription.
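To put that battery figure in perspective, here is the arithmetic as a small sketch. It assumes the drain stays linear and that transcription is the only thing using power, which is a simplification; the function names are mine.

```python
def drain_percent_per_hour(percent_used: float, minutes: float) -> float:
    """Scale an observed battery drop to an hourly rate."""
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return percent_used * 60 / minutes


def hours_to_empty(percent_used: float, minutes: float) -> float:
    """Hours a full charge would last at the observed rate (linear assumption)."""
    rate = drain_percent_per_hour(percent_used, minutes)
    if rate <= 0:
        raise ValueError("observed drain must be positive")
    return 100 / rate


# My observation: 3% used over a 30-minute recording.
print(drain_percent_per_hour(3, 30))   # hourly drain in percent
print(round(hours_to_empty(3, 30), 1)) # hours on a full charge
```

At that rate, continuous transcription works out to about 6% per hour.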

Translation apps such as Google Translate use the NPU for camera-based translation. Point your camera at a sign in a foreign language and the translation is overlaid immediately. No internet needed. I tried this in airplane mode and it works great.

Object Detection for Apps

Shopping apps use the NPU for visual search. I tried the camera search in Amazon: when I point the camera at a product, it finds similar products for sale. The NPU performs the object detection locally and sends only the match data to the server.

Augmented reality filters (Snapchat, Instagram) depend heavily on NPUs for real-time face tracking. Your NPU can follow 50+ points on your face at the same time, which is how those dog ears stay stuck to you.

Performance Reality Check: What NPUs Can (and Can’t) Do

After weeks of testing, here is my honest assessment of how NPU performance compares with expectations.

What works great:

- Face/object recognition: Genuinely instant.

- Photo enhancement: No observable lag.

- Voice to text: Accurate in real time.

- Specialized AI tasks: Smooth and seamless.

What’s still limited:

- Complex AI conversations: On-device LLMs have improved, but they still trail cloud models.

- Sustained workloads: Phones get hot after 30 seconds or more of continuous NPU use.

- Older/mid-range phones: NPU performance is considerably lower on low-end phones.

I ran a 7B-parameter language model on a flagship phone. It worked, with responses generated at an average of 20-30ms per token, but the phone got hot after 2-3 minutes of generation. Thermal management is a real limitation.
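Since I quote speed both in milliseconds per token and in tokens per second in this article, here is the conversion between the two as a quick sketch. The function names are just for this example.

```python
def to_tokens_per_second(ms_per_token: float) -> float:
    """Convert a per-token latency in milliseconds to throughput."""
    if ms_per_token <= 0:
        raise ValueError("ms_per_token must be positive")
    return 1000.0 / ms_per_token


def to_ms_per_token(tokens_per_second: float) -> float:
    """Convert throughput back to per-token latency in milliseconds."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return 1000.0 / tokens_per_second


for latency in (20, 30):
    print(latency, "ms/token ->", round(to_tokens_per_second(latency), 1), "tokens/s")
print("20 tokens/s ->", to_ms_per_token(20), "ms/token")
```

So 20-30ms per token corresponds to roughly 33-50 tokens per second, faster than the roughly 20 tokens per second I saw in the Snapdragon test earlier, which was a different phone and model.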

What’s Coming Next: Generative AI Goes Mobile

The coming 12-18 months will transform mobile AI tremendously. Here is what is actually happening (not marketing hype):

Larger on-device language models: Current flagship NPUs can run models of 7-13B parameters. By around the end of 2026, 30-50B parameter models should become feasible with improved quantization and compression methods.
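Quantization matters here because model weights have to fit in the phone's memory. The sketch below estimates weight storage at different bit widths. It counts weights only and ignores activations, caches, and runtime overhead, so real requirements are higher; the function name is mine.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate storage for model weights, in gigabytes (1 GB = 1e9 bytes)."""
    if params_billions <= 0 or bits_per_weight <= 0:
        raise ValueError("inputs must be positive")
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


for params in (7, 13, 30, 50):
    sizes = {bits: weight_memory_gb(params, bits) for bits in (16, 8, 4)}
    print(f"{params}B params:", sizes)
```

A 7B model drops from about 14 GB at 16-bit to about 3.5 GB at 4-bit, which is the difference between not fitting and fitting on a phone. By the same arithmetic, a 50B model at 4-bit still needs about 25 GB for weights alone, which is why the larger sizes depend on further compression gains.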

Qualcomm's Snapdragon X AI project targets hybrid processing: intelligently combining the NPU, GPU, and CPU depending on the task. This addresses the thermal throttling issue by distributing the workload.
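To show what "depending on the task" could look like, here is a toy routing policy. It is entirely hypothetical: the workload labels, the 45°C limit, and the rules are my own assumptions for illustration, not Qualcomm's scheduler or any real API.

```python
from enum import Enum


class Unit(Enum):
    NPU = "npu"
    GPU = "gpu"
    CPU = "cpu"


def pick_unit(workload: str, npu_temp_c: float,
              npu_temp_limit_c: float = 45.0) -> Unit:
    """Choose a compute unit for a task (hypothetical policy)."""
    if workload == "heavy_ai":
        # Prefer the NPU, but shift work to the GPU once the NPU runs hot.
        return Unit.NPU if npu_temp_c < npu_temp_limit_c else Unit.GPU
    if workload == "light_ai":
        # Keep small jobs off the NPU so it stays free and cool.
        return Unit.GPU
    # Anything that is not a neural workload stays on the CPU.
    return Unit.CPU


print(pick_unit("heavy_ai", npu_temp_c=35.0))
print(pick_unit("heavy_ai", npu_temp_c=50.0))
print(pick_unit("light_ai", npu_temp_c=35.0))
print(pick_unit("ui_logic", npu_temp_c=35.0))
```

A real scheduler would weigh power, memory, and operator support as well, but the basic idea of moving work away from a hot unit is the same.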

MediaTek's bid for AI leadership targets mid-range phones, bringing capable NPUs to phones that cost less than $400. This opens on-device AI up beyond flagships.

Multimodal models: Instead of separate models for vision, language, and audio, a multimodal model is one unified model that handles everything at once. Point the camera, ask a question, get a spoken answer, all of it happening locally.

Should You Actually Care About Your Phone’s NPU?

Here is what I think after trying all this: if you care about privacy, battery life, or using your phone offline, you already have an NPU on your side. You just didn't know about it.

For most people, NPUs work in the background, making features quicker and more efficient. You don't need to understand the tech to benefit from it.

However, if you are a developer, an enthusiast, or just want the latest AI features, understanding NPUs helps you choose the right device. A phone with a strong NPU (Snapdragon 8 Gen 4, Dimensity 9500, Apple A19 Pro) will handle AI features significantly better than mid-range alternatives.

The biggest change coming? AI applications that run entirely on-device instead of calling the cloud. That is when NPUs stop being a nice optimization and start changing what your phone is capable of.

For now, I'm just glad my phone has one, even though I didn't know it until recently.
